DirectX 11/12 request [merged]

GW2 is not the same as GW1. It’s more complicated, so it will take many more resources than its predecessor. And all this for minimal improvements.

You also need to realize that not all games are designed the same. When games have upgraded to a higher DX version, have they all received the EXACT same percentage boost in performance? No, they did not, as evidenced by the numerous benchmarks in the past. What may boost performance greatly in one game may barely do so in another. It comes down to how it was designed (remember that GW2 is CPU intensive). And as the dev stated in the Reddit thread, going to DX12 will provide minimal improvements, as the issue people are experiencing resides outside of the render thread.

The dev is an expert in their field with years of experience. They do not need to cite a source and especially since they were talking about GW2 specifically which there will not be a source for. Well not unless they released the source code but good luck with that.

Just because he is an expert or has experience doesn’t mean he is 100% right! And there is nothing to back his claims, no benchmarks that can be corroborated.

“ANet. They never miss an opportunity to miss an opportunity to not mess up.”

Mod “Posts created to cause unrest with unfounded claims are not allowed” lmao

(edited by JediYoda.1275)

He does with how this game was built which is what I was getting at. I was not talking about just his experience with the subject matter alone. Not all games receive the same boost in performance when upgrading to a higher DX version.

I never once claimed all games will get the same performance increase. I proved with those links that games will benefit from newer DX versions, as the links clearly show. No matter how much of an expert the dev is, or how much experience you claim he has with the game engine, there is always a 50/50 chance he could be wrong. I don’t believe everything someone tells me over the internet, even less so without anything to back it up. Believe what you want!

Please ignore any of my post and I will ignore any of yours.

“ANet. They never miss an opportunity to miss an opportunity to not mess up.”

Mod “Posts created to cause unrest with unfounded claims are not allowed” lmao

You could always go out and buy an awesome Intel CPU.

Think of it as buying a new next gen console, except it’s useful.

Right! Useful, only…….. if you’re in a position where you need a new CPU you also need a new motherboard and probably a new set of ram to go with that.

Think of it as buying a next gen console except you won’t actually be getting the same benefits, it won’t last nearly as long and will cost 2-3 times more.

Look Mod 2 off topic post where is their forum infractions?

Note: Mod is on war path today, and do I get another one for this post too?

“ANet. They never miss an opportunity to miss an opportunity to not mess up.”

Mod “Posts created to cause unrest with unfounded claims are not allowed” lmao

(edited by JediYoda.1275)

You could always go out and buy an awesome Intel CPU.

Think of it as buying a new next gen console, except it’s useful.

Right! Useful, only…….. if you’re in a position where you need a new CPU you also need a new motherboard and probably a new set of ram to go with that.

Think of it as buying a next gen console except you won’t actually be getting the same benefits, it won’t last nearly as long and will cost 2-3 times more.

Except all of that is wrong.

JediYoda, you need to learn what exactly is an API, what it does, what parts of the rendering it affects.

Instead of posting that many links and claiming that what they say is more accurate than the words of a dev who has worked on this game, you could spend the time reading Microsoft’s DX12 articles, which explain exactly what the features are.

I mean, it is easier for all of us if you learn why the API has so little effect on GW2’s performance issues than for us to try to explain it to you…

PS: The second link you use to “prove” that a DX upgrade brings better performance is actually showing a decrease in DX11 over DX9.

At least, make sure all your arguments are consistent.

i7 5775c @ 4.1GHz – 12GB RAM @ 2400MHz – RX 480 @ 1390/2140MHz

(edited by Ansau.7326)

JediYoda, you need to learn what exactly is an API, what it does, what parts of the rendering it affects. Instead of linking such amount of links claiming what they say is more accurate than the words of a dev that has worked on this game, you could spend the time reading Microsoft DX12 articles, explaining exactly which are the features.

I mean, it is easier for all of us you learn why the api has that little effect in gw2 performance issues, and not try to explain you it…

PD: The second link you use to “prove” dx upgrade bring better performance is actually showing a decrease in dx11 over dx9.

At least, make sure all your arguments are consistent

reported for being rude and attacking me, and I will tell you what I was told

Moderator Note:

We expect all community members to be respectful of one another. Insulting or being rude to other community members for any reason is not allowed. Posts that attack another member, single out a player for ridicule, or accuse someone of inappropriate behavior will be removed without notice. If someone is breaking the rules, instead of replying in kind, please report the post so the moderators can review it for possible action. Continued posting of this kind can result in suspension or termination of your forum privileges.

“ANet. They never miss an opportunity to miss an opportunity to not mess up.”

Mod “Posts created to cause unrest with unfounded claims are not allowed” lmao

(edited by JediYoda.1275)

more bugs with better details? XD

Now on a serious note, DX12 came to fix a lot of performance issues; I wonder how GW2 WvW would behave, or if it would receive any kind of improvement.

For sure they have a department doing that or did.

But what really is required to update a game’s DX version? Just updating the DX APIs, or the game engine itself as a whole? I want links to said information, not speculation, because few games are set to get DX12 in patches.

Seems you’ve forgotten one of the most important links on your crusade about DX12’s glorious performance…

https://en.wikipedia.org/wiki/DirectX

Microsoft DirectX is a collection of application programming interfaces (APIs) for handling tasks related to multimedia, especially game programming and video, on Microsoft platforms. Originally, the names of these APIs all began with Direct, such as Direct3D, DirectDraw, DirectMusic, DirectPlay, DirectSound, and so forth.

As that implies, you don’t just ‘replace some files and recompile something from DX_ to DX12’. You refactor or reconstruct everything that has any reference to functionality provided by DirectX (not just graphics) that’s changed or deprecated, then ensure that no other component in your entire system behaves differently after doing so. Turns out in a game engine more complex than Minesweeper, it’s an incredible amount of work.

For performance; it was already said. Everyone arguing DX11 (and 12) knows one of the most noticeable improvements comes from multithreaded rendering – or being able to keep deferred device contexts on many threads instead of just one. Turns out you can use this knowledge for some basic profiling. Doesn’t take much more than some creative thinking and a debugger to start on this.

http://i.imgur.com/dgwY5Es.png

In practice it takes a bit more work than the above, as Direct3DCreate9 will only get you a device context. What you need to do after is find and put breakpoints on the ::BeginScene or ::EndScene routines in that context, which are guaranteed to be called once and only once per frame in the renderer loop. Or find yourself a proxy/debug D3D9.dll to skip that part. When you find the thread that does the drawing, you can compare its ID in something like Process Explorer and see that, like Johan said, it is not the bottleneck. It’s the 2nd or 3rd thread on that list. Which means that, if you consider a super simple pipeline like this,

http://i.imgur.com/L2H4Aky.png

reducing the DX9 GPU drawing overhead will have zero impact on how long the underlying CPU calculations that prepare the frame take, and therefore, zero impact on your observed FPS in the CPU-bottlenecked case of GW2.
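To put rough numbers on that, here is a minimal sketch. It assumes the two stages of the simple pipeline above run on separate threads and overlap, so the slower stage sets the frame time. The stage names and millisecond figures are made up for illustration; they are not measurements of GW2.

```python
def fps(stage_ms):
    """Frames per second for a pipeline whose stages overlap on separate threads.

    The slowest stage sets the pace; the faster stages just wait for it.
    """
    return 1000.0 / max(stage_ms.values())


# Hypothetical per-frame costs in milliseconds (illustrative only).
dx9 = {"game_logic": 25.0, "render_submit": 10.0}
# Pretend a newer API halves the draw-call overhead on the render thread.
dx12 = {"game_logic": 25.0, "render_submit": 5.0}

print(fps(dx9))   # limited by game_logic
print(fps(dx12))  # still limited by game_logic, so the same frame rate
```

Only when the render stage is the slowest one does shrinking it change the result.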

So please… can we let these DX11/DX12 threads die already…

From someone with knowledge about the game engine and what it can and can’t do.

https://www.reddit.com/r/Guildwars2/comments/3ajnso/bad_optimalization_in_gw2/csdnn3n

Or just read that if you have no understanding of what I typed out above.

I hope everyone knew that this would be a lot of work to rewrite the engine when we started this discussion, I assumed that was common knowledge. I think that it is, in spite of the effort required, the right thing to do if the devs want this game to last a lot longer. This doesn’t need to stop at dx12 either. Objects aren’t thread-safe? Well then rewrite them to support multithreading!

I will admit, I did not know the render thread was not the typical bottleneck with gw2. So perhaps this dx12 discussion is outdated. I apologize. The fact still stands that gw2 needs optimization if it is going to last another few years with this size playerbase. And to be honest, I hope it does. I would like to be able to finish off all the dragons before we get a gw3 (or, god forbid, anet/ncsoft give up the franchise as a lost cause).

Contact me and see if you are eligible for Council of Dusk [Dusk]

Has anyone noticed that gw2 seems to rely much more on the CPU than the GPU? Are you noticing a lack of multi-core utilization? Wish gw2 could run a bit better? There is an answer to these questions…

Vote for DirectX12 support!

Too long has gw2 been confined to the ancient limitations of dx9. It is time to make your voice heard! If ANET were to rewrite gw2 in dx12, we would see massive performance gains! Why confine the game to a single core when we can use all the cores? Dx12 has massive gains over dx11, imagine what the gain over dx9 would be!

I want to see the marvelous world that ANET has sculpted better than ever before. I want to be able to use the full power of my system to make it sharp as it can be.

Are you with me?

Are you and the seriously minute minority going to singlehandedly pay anet’s staff working on transitioning from dx9 to dxwhateverthehellitisnow? Are you also going to pay for the entire budget of said undertaking? Performance is NOT the reigning factor currently ruling against you. It’s the fact that the cost of such an undertaking has a seriously underwhelming boost in profit for anet. If GW2 was on a subscription model, then MAYBE they’d have migrated to a different DirectX version by now.

So… yea, not gonna happen. Stop asking.

Would DX12 improve game sales?

No?

Why would they do it then?

MSI GTX 1080 Sea Hawk EK X 2xSLI 2025 / 11016 MHz, liquid cooling custom loop.

Samsung 850 Evo 500 GB. HTC Vive.

Would DX12 improve game sales?

No?

Why would they do it then?

Good point. But what if ANet wants to expand the audience and port GW2 to the Xbox One, which is now supposedly a Windows 10 machine?

Wouldn’t be possible with the current game engine design. The per-core performance in the XBOne and PS4 is a third of the performance of an Intel core. At that point they might as well start with an existing multiplatform engine and fit the game to it rather than trying to build a multiplatform engine from scratch. Of course that may mean that some of the systems we enjoy today may have to change to fit an existing engine.

RIP City of Heroes

The main issues for a console port are royalties on game sales, the console update process, which would clash with ANet’s update policy, and the fact that you have to use Sony and Microsoft servers, which would be separate from PC/Mac (and that means separate EU and NA servers for each console too).

i7 5775c @ 4.1GHz – 12GB RAM @ 2400MHz – RX 480 @ 1390/2140MHz

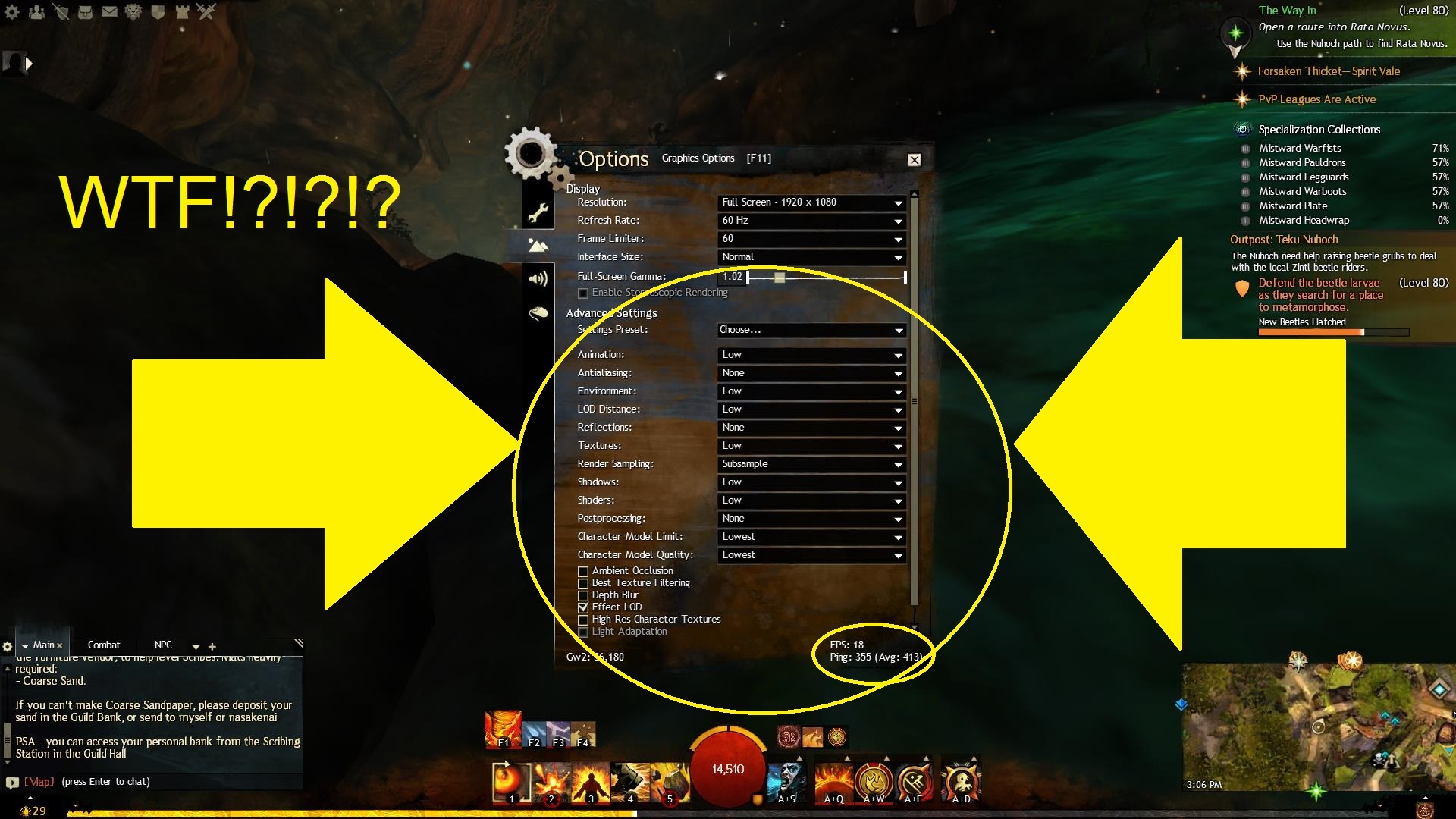

So THIS happened today. It just reaffirms my vote for DX12. I don’t get why GW2 is running like crap, but Witcher 3 (high, hairworks off, low shadows and water) still runs at 60fps… SMH

Planning on upgrading to a GTX980ti by late 2016

So THIS happened today. It just reaffirms my vote for DX12. I don’t get why GW2 is running like crap, but Witcher 3 (high, hairworks off, low shadows and water) still runs at 60fps… SMH

More a problem with your computer than the game.

i7 5775c @ 4.1GHz – 12GB RAM @ 2400MHz – RX 480 @ 1390/2140MHz

It won’t happen anytime soon simply because Windows 10 isn’t even widely used yet. If only a small percentage of people wants to upgrade to Windows 10 (even if it’s mandatory downloaded in the background offered for free by Microsoft) then logic dictates that they don’t even care about DirectX 12.

It’s not practical to throw resources on tech that doesn’t cater to majority of your target market.

So THIS happened today. It just reaffirms my vote for DX12. I don’t get why GW2 is running like crap, but Witcher 3 (high, hairworks off, low shadows and water) still runs at 60fps… SMH

More a problem with your computer than the game.

How so? I had an average of 50fps the night before, and all I did today was change my CPU cooler to a stronger one, and the problem started happening on its own. It eventually went up after switching to the Beta 64bit Client, but it still spikes randomly.

Planning on upgrading to a GTX980ti by late 2016

im not greedy

i only want anet to have multi core support, the rest can wait

Henge of Denravi Server

www.gw2time.com

So THIS happened today. It just reaffirms my vote for DX12. I don’t get why GW2 is running like crap, but Witcher 3 (high, hairworks off, low shadows and water) still runs at 60fps… SMH

So how many big zerg fights with or against other players over the internet are there in the Witcher where you still have that performance? Oh … wait … there are none .. right?

I don’t understand why people compare apples with oranges all the time. Has no one ever played, for example, Lineage 2 with the Unreal Engine or AION with the CryEngine and seen how these oh-so-good single-player / shooter engines suddenly give you only a slideshow as soon as you have massive amounts of players in an MMO?

And to your problem .. make sure your GPU uses all 16 PCIe lanes. Reseating your GPU mostly helps.

im not greedy

i only want anet to have multi core support, the rest can wait

They have multicore support, only not for more than 4 cores.

Best MMOs are the ones that never make it. Therefore Stargate Online wins.

(edited by Beldin.5498)

To be fair, I tested both in areas where the density would be low. GW2 in the tunnel before Rata Novus, and TW3 in Kaer Morhen. I only used TW3 as an example, because it’s the most taxing game I have in my library.

Planning on upgrading to a GTX980ti by late 2016

I know some areas in GW2 where I can get a maximum of 170 FPS with my old i5 2500k.

Best MMOs are the ones that never make it. Therefore Stargate Online wins.

Found the problem. Apparently, the new revision of Riva isn’t compatible with GW2… I’m gonna have to slum it with the rest of the denizens and check my FPS the old fashioned way now.

Planning on upgrading to a GTX980ti by late 2016

HoT is a massive success, but that has nothing to do with DX12. I already get 60fps at max gfx with a modest system, so an extra x% is a total waste of money. I will have more content pls.

“Trying to please everyone would not only be challenging

but would also result in a product that might not satisfy anyone”- Roman Pichler, Strategize

im not greedy

i only want anet to have multi core support, the rest can wait

They have multicore support, only not for more than 4 cores.

nope is only two cores and anet staff already verified that the game indeed is badly optimized to the point that even though it appear to be utilizing two cores, a lot of the things are done on one thread thus it is mainly one core that doing the job.

also…multi cores as in…cpu scaling

gw2 was developed in the old era that still uses 2 cores….

however, dev can no longer keep using two cores as the core speed no longer increases, what continue to increase is the number of cores we have on our processor. even intel made a statement to all game dev to start using the cores and not depend on single core performance, i guess intel also reaching their limit on single core performance

Henge of Denravi Server

www.gw2time.com

Forgot to add to my post above – making your engine compatible with DX_ was only step 1. Step 2 would include changing/redesigning and retesting again for each new feature of DX_ that you want to take advantage of.

Objects aren’t thread-safe? Well then rewrite them to support multithreading!

Thread-safe doesn’t mean faster or more efficient. On one hand, it can be used across threads without worrying about race conditions, but on the other, the semaphores and locks used to make this happen can result in serious performance penalties even when threaded.
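A minimal sketch of that trade-off, using a hypothetical `SafeCounter` class that has nothing to do with GW2’s actual code. The lock makes the shared counter correct across threads, but every `add` call has to queue up behind it, so the four workers end up taking turns instead of running side by side. Python is used only for illustration here; its interpreter lock serialises threads even further.

```python
import threading


class SafeCounter:
    """A counter that is safe to share between threads."""

    def __init__(self):
        self._lock = threading.Lock()
        self.value = 0

    def add(self, n):
        # Only one thread at a time may run this block; the others wait here.
        with self._lock:
            self.value += n


def run(workers, increments):
    """Have several threads hammer one shared counter and return the total."""
    counter = SafeCounter()

    def work():
        for _ in range(increments):
            counter.add(1)

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


total = run(workers=4, increments=10_000)
print(total)  # always correct, but the threads spent their time waiting in line
```

The result is right every time, which is what thread-safe buys you. It says nothing about the work getting done any faster.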

Likewise, for everyone wanting GW2’s workload to be spread over more than the 3-4 cores it is now (just look at processexplorer while playing…), ANet knows (reddit):

There are conscious efforts in moving things off the main thread and onto other threads (every now and then a patch goes out that does just this), but due to how multi-threading works it’s a non-trivial thing that take a lot of effort to do. In a perfect world, we could say “Hey main thread, give the other threads some stuff to do if you’re too busy”, but sadly this is not that world.

Think of each frame like a dependency tree… you have to calculate what you see to know what to draw, you have to know what to draw to know what assets to load, you have to know what to draw to know what effects can be culled etc. No amount of crying about threads and cores will change that. Those dependencies must be done in that order, and in the case of GW2, that’s a pretty tall (serial) tree compared to something wide (parallel) like video encoding. Yes, sometimes things end up on that tree that don’t need to, and if they happen to be on the longest path, can result in some small gains after being moved off. I’m sure they also have ideas for better parallel designs than what it ended up being. But, like shipping with a new DX, design changes of that scale are not likely to be worth the effort this far into a game’s lifespan.
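The "tall tree" point can be sketched with a toy example. The task names, costs and dependencies below are invented for illustration and are not GW2’s real frame graph. The idea is that even with unlimited cores, a frame cannot finish sooner than its longest chain of dependent tasks.

```python
from functools import lru_cache

# Hypothetical per-frame tasks: name -> (cost in ms, tasks that must finish first).
FRAME = {
    "simulate":   (6.0, []),
    "visibility": (4.0, ["simulate"]),
    "load":       (3.0, ["visibility"]),
    "cull_fx":    (2.0, ["visibility"]),
    "draw":       (5.0, ["load", "cull_fx"]),
    "audio":      (1.0, []),
}


@lru_cache(maxsize=None)
def finish_time(task):
    """Earliest time a task can finish if there are unlimited cores."""
    cost, deps = FRAME[task]
    return cost + max((finish_time(d) for d in deps), default=0.0)


total_work = sum(cost for cost, _ in FRAME.values())
critical_path = max(finish_time(t) for t in FRAME)

print(total_work)     # frame time if everything runs on one core
print(critical_path)  # best possible frame time with unlimited cores
```

In this toy frame, going from one core to unlimited cores only takes it from 21 ms to 18 ms, because almost everything sits on the one long chain. A wide workload like video encoding would have many short independent chains instead.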

im not greedy

i only want anet to have multi core support, the rest can wait

They have multicore support, only not for more than 4 cores.

nope is only two cores and anet staff already verified that the game indeed is badly optimized to the point that even though it appear to be utilizing two cores, a lot of the things are done on one thread thus it is mainly one core that doing the job.

also…multi cores as in…cpu scaling

gw2 was developed in the old era that still uses 2 cores….

however, dev can no longer keep using two cores as the core speed no longer increases, what continue to increase is the number of cores we have on our processor. even intel made a statement to all game dev to start using the cores and not depend on single core performance, i guess intel also reaching their limit on single core performance

Just take a look into your task manager. If only 2 cores are used a i5 would not

even run at 50%.

Best MMOs are the ones that never make it. Therefore Stargate Online wins.

Has anyone noticed that gw2 seems to rely much more on the CPU than the GPU? Are you noticing a lack of multi-core utilization? Wish gw2 could run a bit better? There is an answer to these questions…

Vote for DirectX12 support!

Too long has gw2 been confined to the ancient limitations of dx9. It is time to make your voice heard! If ANET were to rewrite gw2 in dx12, we would see massive performance gains! Why confine the game to a single core when we can use all the cores? Dx12 has massive gains over dx11, imagine what the gain over dx9 would be!

I want to see the marvelous world that ANET has sculpted better than ever before. I want to be able to use the full power of my system to make it sharp as it can be.

Are you with me?

You know that you can’t just throw GW2 on DX12. They’d probably have to rewrite the game they’ve taken 3 years +5 years of pre-launch to create.

Would DX12 even touch any of the real performance issues?

Not to mention the server issues..

im not greedy

i only want anet to have multi core support, the rest can wait

They have multicore support, only not for more than 4 cores.

nope is only two cores and anet staff already verified that the game indeed is badly optimized to the point that even though it appear to be utilizing two cores, a lot of the things are done on one thread thus it is mainly one core that doing the job.

also…multi cores as in…cpu scaling

gw2 was developed in the old era that still uses 2 cores….

however, dev can no longer keep using two cores as the core speed no longer increases, what continue to increase is the number of cores we have on our processor. even intel made a statement to all game dev to start using the cores and not depend on single core performance, i guess intel also reaching their limit on single core performance

Just take a look into your task manager. If only 2 cores are used a i5 would not even run at 50%.

……

Since when i5 has hyper threading to 8 cores. You sure you know your stuffs?

Henge of Denravi Server

www.gw2time.com

I don’t. Why lock to one system.

Opengl please.

(If you tell me that mac client is not locking to one system all it is is a wrapper for the windows client (Even I hate mac))

I am not sure that we do.

How much would it cost?

Where does that money come from?

How is that cost to be reclaimed?

How much content could have been produced for that cost?

DX12 also requires Windows 10 so any players who would want to see any supposed benefits from it would have to upgrade if they don’t already have it.

I want OpenGL not DX12! give us OpenGL!!!!

City of Heroes was OpenGL. It was a forum support nightmare because both AMD and especially nVidia had issues with OpenGL in their consumer drivers, as opposed to the ones for the professional series of video cards. There were times when it was working only to get broken again by the next beta driver, which players switched to because a new DX game had come out, so they had to choose between the latest driver for their latest DX game or a stable OpenGL driver for their MMO. Trust me, it wasn’t fun times. Too many gamers upgrade drivers as a reflex reaction as soon as a new set comes out.

RIP City of Heroes

To me it sounds like you expect DX12 to magically solve any problems around..

This feels like 1995 to me when Microsoft jumped on the DooM hype and released DooM95 – running DooM on DirectX and on pseudo-32-bit increasing RAM requirements. While the game ran fine on 16-bit MS-DOS without DX….

20 years later…

and politically highly incorrect. (#Asuracist)

“We [Asura] are the concentrated magnificence!”

So THIS happened today. It just reaffirms my vote for DX12. I don’t get why GW2 is running like crap, but Witcher 3 (high, hairworks off, low shadows and water) still runs at 60fps… SMH

Maybe the problem is at your end.

It most likely is a problem on my end. I’m gonna bite the bullet and upgrade my rig prematurely (cause I schedule my upgrades).

Planning on upgrading to a GTX980ti by late 2016

So THIS happened today. It just reaffirms my vote for DX12. I don’t get why GW2 is running like crap, but Witcher 3 (high, hairworks off, low shadows and water) still runs at 60fps… SMH

I got some RAM and worked just fine … 4Gb no longer cuts it sadly … 8Gb does.

how many customers use windows 10? maybe 20%

vulkan is cross platform.

I want OpenGL not DX12! give us OpenGL!!!!

And i want Glide .. 3dfx Voodoo cards were so much better than NVidia crap at their times

im not greedy

i only want anet to have multi core support, the rest can wait

They have multicore support, only not for more than 4 cores.

nope is only two cores and anet staff already verified that the game indeed is badly optimized to the point that even though it appear to be utilizing two cores, a lot of the things are done on one thread thus it is mainly one core that doing the job.

also…multi cores as in…cpu scaling

gw2 was developed in the old era that still uses 2 cores….

however, dev can no longer keep using two cores as the core speed no longer increases, what continue to increase is the number of cores we have on our processor. even intel made a statement to all game dev to start using the cores and not depend on single core performance, i guess intel also reaching their limit on single core performance

Just take a look into your task manager. If only 2 cores are used a i5 would not even run at 50%.

……

Since when i5 has hyper threading to 8 cores. You sure you know your stuffs?

50% of 4 are 2 .. don’t know why its 8 for you.
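The arithmetic behind that, as a small sketch. It assumes a desktop i5 with 4 cores and no Hyper-Threading, and that the cores in use are fully busy while the rest sit idle; `overall_cpu_percent` is just a made-up helper name.

```python
def overall_cpu_percent(busy_cores, logical_cores):
    """Overall CPU usage a task manager would show if some cores are fully busy."""
    return 100.0 * busy_cores / logical_cores


print(overall_cpu_percent(2, 4))  # 2 busy cores on a 4-core i5
print(overall_cpu_percent(2, 8))  # the same 2 busy cores on 8 logical cores
```

So a game that really used only 2 cores would top out at 50% on such an i5, and seeing more than that in the task manager points to more than 2 cores doing work.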

Best MMOs are the ones that never make it. Therefore Stargate Online wins.

(edited by Beldin.5498)

how many customers use windows 10? maybe 20%

Vulkan is cross platform.

Yeah Vulkan might be better. DirectX12 is mentioned more often simply because it’s more famous.

DX12 also requires Windows 10 so any players who would want to see any supposed benefits from it would have to upgrade if they don’t already have it.

Comments like this prove that you are just full of it. Adding DX12 does not in any way mean that players are forced to use it, which would lead to your idea that players are forced to have win 10. Many games have multiple support for dx versions and when they add support for a new version there is not one that I know of that no longer supports the original version. The only reason it would become a win 10 exclusive is because arenanet are morons. Which I don’t think they are.

Ya want to say the devs are not competent enough to add this support or that arenanet/Ncsoft are too cheap to invest in the game, fine. But please stop with the FUD. Honestly there is no reason to not do this. As it won’t hurt anything and it won’t force anything. It just adds options. Not sure why anyone is against options. I don’t expect it today but if they allocated just a little bit of resources it could happen 4th quarter 2016 or some time 2017.

DX12 is Windows 10 exclusive. To see any benefits from DX12, players will have to be using Windows 10. That exclusivity is because Microsoft made it so. Take it up with them if you have an issue with it rather than blame Anet.

http://m.windowscentral.com/directx-12-will-indeed-be-exclusive-windows-10

I also do not get where you got the idea that players would be forced to use DX12. Nobody has been saying that from what I can recall.

Also changing the system to allow DX12 has costs. Not everything is worth it and especially if adding DX12 will not solve the problems that people have been complaining about.

(edited by Ayrilana.1396)

how many customers use windows 10? maybe 20%

vulkan is cross platform.

I have high hopes for Vulkan, whenever it will be truly available for end users. The successor of Mantle + OpenGL combined, if you will. Big names in the industry like Valve seem to favor it over DX12. We’ll see how things turn out.

I want OpenGL not DX12! give us OpenGL!!!!

And i want Glide .. 3dfx Voodoo cards were so much better than NVidia crap at their times

im not greedy

i only want anet to have multi core support, the rest can wait

They have multicore support, only not for more than 4 cores.

nope is only two cores and anet staff already verified that the game indeed is badly optimized to the point that even though it appear to be utilizing two cores, a lot of the things are done on one thread thus it is mainly one core that doing the job.

also…multi cores as in…cpu scaling

gw2 was developed in the old era that still uses 2 cores….

however, dev can no longer keep using two cores as the core speed no longer increases, what continue to increase is the number of cores we have on our processor. even intel made a statement to all game dev to start using the cores and not depend on single core performance, i guess intel also reaching their limit on single core performance

Just take a look into your task manager. If only 2 cores are used a i5 would not even run at 50%.

……

Since when i5 has hyper threading to 8 cores. You sure you know your stuffs?

50% of 4 are 2 .. don’t know why its 8 for you.

Seriously, are you even reading your comment? You even quoted your own comment. This is exactly what you wrote yourself: “If only 2 cores are used a i5 would not even run at 50%.”

/facepalm

Also, you are the one that said gw2 is capable of using up to 4 cores.

/facepalm2

Henge of Denravi Server

www.gw2time.com

(edited by SkyShroud.2865)

The problem is quite visible if you look at it properly. As a programmer, I get it: it’s a huge undertaking to rewrite code. But that’s more of a Microsoft problem; they should be writing APIs that do NOT require complete rewrites of code, period.

Optimization is one thing; the art department of ArenaNet creating content the engine cannot cash is another, and that is the real problem you have. It’s one thing to have the best graphics (that I’ve personally seen in an MMORPG), but what good are those things if the art department hasn’t a clue about optimizing the content they create? It feels and looks at times as if the directors have just let the art department go crazy and haven’t forced limitations on what they are allowed to create.

These thoughts come from someone who has ZERO problems playing the ‘core’ game on a laptop HD4000 with 8gb ram, but when it comes to Dry Top / Silverwastes & HoT it hasn’t a hope, because it feels / looks like they haven’t even tried to rein the art department in. They need a reminder of what the minimum specs actually are, I think.

I don’t mind; eventually I’ll get a rig that will run GW2 fine in all areas. Here is hoping a GTX970 4Gb DDR5 will put the game in its place with the aid of an i7 6300, and if that doesn’t do it, well, it’s time to pack my bags and just live under a rock.