Will the optimization help AMD CPUs?

Is there actually an optimization for AMD CPUs coming?

Well, there was a thread somewhere about overall optimization to improve how the game runs. I’m sure that will affect how AMD systems run too.

Your best bet with AMD cpus is to overclock them as much as you can safely.

There will never be optimization specifically for AMD CPUs. They just have bad single-thread performance.

intel 335 180gb/intel 320 160gb WD 3TB Gigabyte GTX G1 970 XFX XXX750W HAF 932

Eh, that’s only partially true. The game is just unoptimized for multicore performance, plain and simple. The next iteration of AMD’s processors (Steamroller/Kaveri) is due out at some point by the end of this year, with a rumoured 25 – 30% increase in single-threaded IPC. Single-threaded performance is unfortunately the bottleneck in this game, so the solution might lie there.

The truth is we don’t know. All we do know is that AMD has the next-gen consoles locked up, so a lot of developers are going to be dragged kicking and screaming into better multi-core support, HSA programming languages, and low-level APIs such as Mantle. That being said, unless we get a miraculous 64-bit client with multi-threaded support (hey, it happened to WoW 4 years after the game was released) or ArenaNet decides to port the game to the PS4, the Xbox One, or both, I highly doubt we will see much of an increase in minimum FPS.

Trust me, I’ve got a custom-loop FX-8350 sitting at 4.7 GHz to 5.0 GHz at any given time, and my GTX 670 nets me at best 82% usage and at worst 52% usage, with frames peaking at 110 FPS and bottoming out at 12 FPS. So I’m in the same boat, and believe me when I say I’ve done all I can on my end to optimize this game. The rest is up to ArenaNet. So the best bet at this point is to just wait and see, and cross your fingers that we don’t have to wait for Kaveri’s substantial single-threaded IPC improvements to get better frames.

(edited by yawa.5103)

Haha, so basically I’ve got a heap of junk CPU (which I just bought) to play this game and, umm, yeah, I can’t play. The FX series works pretty well in most other games, so it’s a shame. Guess I will be saying goodbye to Guild Wars, lol. Maybe I’ll come back if I ever get a new CPU or they make use of more cores.

And what CPU specifically do you have? And what kind of framerates?

I have a Phenom II X4 processor, and a laptop with a A10-4600M and GW2 plays “reasonably” on them.

FX-8350. Plays most things well aside from zergs.

I have an Fx-8150 with only a small factory overclock, 16G RAM, and a single AMD Radeon 6970 (MSI Twin Frozr II Series)

I can run the game almost everywhere at 40-65fps in the highest possible detail, and run at about 30fps in zergs. I pretty much have no problems. Not sure what you guys are complaining about.

Parashadow (Mesmer)

WvW Mesmer Commander

How the heck do you have 30 FPS in zergs? It takes a huge overclock on an intel i7 3930K to get 30 FPS in a zerg. Unless the zerg is small I think you’re blowing smoke there.

AMD FX-8320 runs fine too with boost enabled (4.0 GHz, gonna do a “real” overclock to 4.0 GHz whenever I get a better cooler). Only 15 FPS in zergs, though.

Ganking, you’re either running ‘lowest’ on the culling settings and/or you just haven’t been in a truly large enough zerg to see how bad performance gets.

Hell, in some battles that could be considered zergs, I can easily sit around all day at 45+ FPS. But compared to the zergs that net 20-25 FPS, those are tiny.

Yes, I could increase those figures by 10 FPS or so if I ran ‘lowest’ on the culling settings, but by that point you have effectively turned a large zerg into a small one by culling out over half the people on the screen, and those left are all green and it looks like crap.

Instead I run highest on the culling character limit so I can see every single one of the people in the zerg, and medium/low on the culling texture limit so at least the people near me are fully textured and those further away are green. With that and my system, FPS drops as low as 20-25 in MASSIVE EB zergs.

AMD’s Mantle API would, if what we have been told by AMD is to be believed, do wonders for GW2. The problem is that it would be A LOT of work on Anet’s side to move GW2 from DX9 to Mantle.

EVGA GTX 780 Classified w/ EK block | XSPC D5 Photon 270 Res/Pump | NexXxos Monsta 240 Rad

CM Storm Stryker case | Seasonic 1000W PSU | Asux Xonar D2X & Logitech Z5500 Sound system |

(edited by SolarNova.1052)

What yawa and Solar said is 100% correct.

I just don’t think that we will ever see any performance improvements over the game’s lifespan.

The devs have told us that we are going to get QoL improvements for performance in the next little while, but I have seen neither hide nor hair of that.

I also don’t think that they are capable of fixing the issue. The current engine cannot support the content being delivered.

intel 335 180gb/intel 320 160gb WD 3TB Gigabyte GTX G1 970 XFX XXX750W HAF 932

How the heck do you have 30 FPS in zergs? It takes a huge overclock on an intel i7 3930K to get 30 FPS in a zerg. Unless the zerg is small I think you’re blowing smoke there.

Well, I am on Anvil Rock, so it’s probably a little smaller than what you’re used to. But not too small.

Parashadow (Mesmer)

WvW Mesmer Commander

I have the 8320 and it does run this game OK in open-world PvE, but even in 8v8 PvP frames drop to 20 or so, and world bosses are slideshows at 8 FPS. I may as well have a dual core with no graphics card; I’d see similar results in world fights, lol.

My PC has gone back at the moment; they think it may well be underperforming slightly, but pffft, who knows!

So far as I’ve seen, the only way people have gotten ‘great’ fps with an AMD FX 8 core was when it had a monstrous overclock.

I have an 8350 at stock clock and a 7950 video card. I got about 39 FPS in LA, and in the Tequatl zerg I was getting in the 20s. I have everything turned on high except for Render Sampling at Native and Character Model Limit set to Low (I don’t care about seeing people).

Eh, that’s only partially true. The game is just unoptimized for multicore performance, plain and simple. The next iteration of AMD’s processors (Steamroller/Kaveri) is due out at some point by the end of this year, with a rumoured 25 – 30% increase in single-threaded IPC. Single-threaded performance is unfortunately the bottleneck in this game, so the solution might lie there.

My word. If this is true, I may think about getting a new CPU/mobo sooner than expected.

Anywho, any optimizations that made the game run better would of course cause an improvement for AMD CPUs. However, anything short of much better multithreading most likely wouldn’t help excessively, because of AMD’s relatively poor single core performance.

EGVA SuperNOVA B2 750W | 16 GB DDR3 1600 | Acer XG270HU | Win 10×64

MX Brown Quickfire XT | Commander Shaussman [AGNY]- Fort Aspenwood

in Account & Technical Support

Posted by: Kendra Nightwind.8734

I am not so sure that it is an AMD performance issue. My wife and I have almost the same system; the main difference is that I have an AMD 6950 2GB card and a Sound Blaster X-Fi sound card, and she is using onboard sound with a GTX 460 1GB. About five weeks ago I had no video problems at all, consistently 30+ FPS (I think I was in a zerg once where it dropped to 20 FPS). When SAB was reintroduced into the game, my FPS suddenly dropped to 7 (and that was in performance mode!). From what I read, they did some optimizations to the sound subsystem (FMOD) when they released SAB. Since then my performance has improved; my average FPS is now up to 15 FPS using performance settings.

I am not a happy camper at all. Whatever they did, I would like them to either undo it or fix it. I think what we really need is a properly optimized, multi-threaded 64-bit game client. I am not sure we actually need a 64-bit client, but I can hope.

If by AMD optimization the OP means support for more than 4 cores, then I would lean toward the no column. Games aren’t so easily subdivided into similar tasks that performance scales linearly just by throwing more cores at them. This isn’t video compression, ray tracing or any other task where the same block of code can be spun off to N-1 cores with a supervisor thread overseeing coordination. Plus there are considerably fewer true six- and eight-core CPUs out there than four-core ones (no, most i7s are still four cores).
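To put rough numbers on why extra cores don’t scale linearly, here is a minimal sketch of Amdahl’s law in Python. The parallel fractions used (50% and 95%) are made-up assumptions for illustration, not measurements of GW2 or any other game.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of the work can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part always takes the same time; only the rest is divided up.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workloads: a game-like one (half serial) and an encoder-like one.
    for fraction in (0.50, 0.95):
        for cores in (2, 4, 8):
            speedup = amdahl_speedup(fraction, cores)
            print(f"parallel={fraction:.0%} cores={cores} speedup={speedup:.2f}x")
```

Under the assumed 50% figure, going from 4 to 8 cores only moves the bound from 1.6x to about 1.78x, which is the kind of flat scaling described above. A workload that is 95% parallel keeps gaining noticeably at 8 cores.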

If by AMD optimization the OP means the discussion a few weeks back about the Intel compiler and optimized library incident from a few years ago, where Intel’s compiler actively refused to acknowledge that any non-Intel CPU supported any streaming instruction set beyond SSE2, so the libraries used for advanced math algorithms like FFT would always perform worse: well, I doubt there is much SSEx code in the game or that ArenaNet would choose to use Intel’s compiler. However, Intel is known to analyze software to determine how frequently instructions and sequences of instructions are used, and with that knowledge optimize their next-generation CPU to perform those instructions or sequences just a little faster than before. That’s what helps determine the arrangement and number of execution units in the core to improve Intel’s superscalar and HT effectiveness.
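For anyone who missed that discussion, the difference between the two dispatch styles can be sketched like this. It is a toy model in Python, not Intel’s actual dispatcher: the `Cpu` class, the `PREFERENCE` list and both function names are hypothetical; only the vendor strings `GenuineIntel` and `AuthenticAMD` are the real CPUID values.

```python
from dataclasses import dataclass

# Code paths from fastest to slowest; "sse2" is the baseline in this sketch.
PREFERENCE = ("avx", "sse4_2", "sse3", "sse2")


@dataclass(frozen=True)
class Cpu:
    vendor: str          # CPUID vendor string
    features: frozenset  # instruction-set extensions the CPU reports


def pick_by_features(cpu: Cpu) -> str:
    """Vendor-neutral dispatch: use the best path the CPU says it supports."""
    for level in PREFERENCE:
        if level in cpu.features:
            return level
    return "sse2"


def pick_by_vendor(cpu: Cpu) -> str:
    """The behaviour complained about: non-Intel CPUs get the baseline path."""
    if cpu.vendor != "GenuineIntel":
        return "sse2"
    return pick_by_features(cpu)


if __name__ == "__main__":
    flags = frozenset({"sse2", "sse3", "sse4_2", "avx"})
    for cpu in (Cpu("GenuineIntel", flags), Cpu("AuthenticAMD", flags)):
        print(cpu.vendor, pick_by_vendor(cpu), pick_by_features(cpu))
```

With identical feature flags, the vendor check sends the AMD part down the slowest path while the feature check treats both CPUs the same. How much that would matter depends on how much of the program actually runs through such dispatched library code.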

Now general high level code optimization will benefit both Intel and AMD equally.

RIP City of Heroes

(edited by Behellagh.1468)

I’m playing with a FX-4100 and HD 6870 and it works fine. I’ve only seen problems in WvW and champ zergs.

Haha, so basically I’ve got a heap of junk CPU (which I just bought) to play this game and, umm, yeah, I can’t play. The FX series works pretty well in most other games, so it’s a shame. Guess I will be saying goodbye to Guild Wars, lol. Maybe I’ll come back if I ever get a new CPU or they make use of more cores.

You purchased a pre built one and didn’t do your homework, eh? Live and learn…

How the heck do you have 30 FPS in zergs? It takes a huge overclock on an intel i7 3930K to get 30 FPS in a zerg. Unless the zerg is small I think you’re blowing smoke there.

Indeed. Want to test real zergs, go up to T1 and zone into stonemist lord room on reset when the 3 big hounds of SOR, BG and JQ are stacked on top of one another, haha.

How the heck do you have 30 FPS in zergs? It takes a huge overclock on an intel i7 3930K to get 30 FPS in a zerg. Unless the zerg is small I think you’re blowing smoke there.

Indeed. Want to test real zergs, go up to T1 and zone into stonemist lord room on reset when the 3 big hounds of SOR, BG and JQ are stacked on top of one another, haha.

I remember doing that once. I swear I heard my computer screaming at me and cursing foul things.

If I could ask a dev anything at this point, it would be what optimizations can we expect at all for those of us running any processor at this point.

If WoW 4 years after release in an ancient, inefficiently coded engine, can manage a much better optimized 64 bit client and correct a lot of lower frame rate issues with it in the process, doing so in this game has to be at least in the realm of doable.

It shouldn’t be too much to ask for a better floor on minimum frame rates for Piledriver and mid-range Haswell (yes, it happens to Intel users as well) level processors, when half the game’s appeal is participating in these world events.

As an AMD user running their current highest IPC processor at 5.0 GHz or as an Intel user running an i5 at 4.5 GHz we should not be hoping for 20 fps while getting a best of 18fps in these events, on relatively high end systems. The performance on common mid to high end processors is simply not acceptable for a game of this level of success, market penetration, and resources, especially a dynamically expanding MMO with a 5-10 year life span.

No one should have to wait to brute force their frame rate up in single core performance for a higher IPC processor just to get semi-playable lower frame rates.

I sincerely hope one of these revisions is focused on getting the most out of these mainstream and fairly high end chips.

There are a lot of issues with the game client.

1. It’s 32-bit, and can only use 2.8GB to 3.1GB of RAM before it crashes (in most cases, from what I’ve seen).

2. It can only thread across 6 cores (physical, not HT; tested with a dual-socket Xeon E5 server, 8 cores + HT per socket. Interesting results, I would say). This is what cripples the high-end GPUs. If my CPU sits at 80% usage while GW2 is running, my GPU will jump to a good 75-80% usage (GPU-Z). But if CPU usage drops below 68%, the GPU drops to sub-50%. This behavior is weird IMHO.

3. It uses A LOT, and I mean A LOT, of disk I/O. I was running a couple of VirtualBox images last night, updating my imaging staging area to Win8.1 (RTM), and while the install was going on (forced cap at 25MB/s; my drives will run at 175MB/s) GW2 was sucking up all the remaining available I/O. It feels like more of the game should be put into memory (my system has 32GB of RAM; the entire game can fit into memory!).

There are more, but those are the biggest issues IMHO. I think it’s time Anet gave us a new client to test with: use an open-platform compiler, make the code 64-bit, and detect all the cores and actively thread across them.
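For context on item 1, the ceiling for any 32-bit process comes straight from pointer size. A quick sketch of the arithmetic follows; it treats the “GB” figures quoted above as GiB, which is an assumption, and nothing in it is measured from the GW2 client.

```python
GIB = 2 ** 30  # bytes in one gibibyte


def address_space_bytes(pointer_bits: int) -> int:
    """Number of distinct byte addresses a pointer of this width can name."""
    return 2 ** pointer_bits


def headroom_gib(pointer_bits: int, used_gib: float) -> float:
    """Theoretical address space left once used_gib is already mapped."""
    return address_space_bytes(pointer_bits) / GIB - used_gib


if __name__ == "__main__":
    print("32-bit address space:", address_space_bytes(32) // GIB, "GiB")
    # 3.1 is the upper crash figure reported in item 1 above.
    print("headroom at 3.1 GiB used:", round(headroom_gib(32, 3.1), 2), "GiB")
```

On Windows the usable part is smaller than the theoretical 4 GiB: a 32-bit process gets 2 GB of user address space by default, and up to 4 GB on 64-bit Windows only if the executable is built large-address-aware. Address space is also shared with DLLs and mapped driver memory and gets fragmented, so running out somewhere below 4 GB would not be surprising.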

Laptop: M6600 – 2720QM, AMD HD6970M, 32GB 1600CL9 RAM, Arc100 480GB SSD

If by AMD optimization the OP means support for more than 4 cores, then I would lean toward the no column. Games aren’t so easily subdivided into similar tasks that performance scales linearly just by throwing more cores at them. This isn’t video compression, ray tracing or any other task where the same block of code can be spun off to N-1 cores with a supervisor thread overseeing coordination. Plus there are considerably fewer true six- and eight-core CPUs out there than four-core ones (no, most i7s are still four cores).

If by AMD optimization the OP means the discussion a few weeks back about the Intel compiler and optimized library incident from a few years ago, where Intel’s compiler actively refused to acknowledge that any non-Intel CPU supported any streaming instruction set beyond SSE2, so the libraries used for advanced math algorithms like FFT would always perform worse: well, I doubt there is much SSEx code in the game or that ArenaNet would choose to use Intel’s compiler. However, Intel is known to analyze software to determine how frequently instructions and sequences of instructions are used, and with that knowledge optimize their next-generation CPU to perform those instructions or sequences just a little faster than before. That’s what helps determine the arrangement and number of execution units in the core to improve Intel’s superscalar and HT effectiveness.

Now general high level code optimization will benefit both Intel and AMD equally.

At work we have a business system that went through this very same thing. The application server was coded to use Intel SSE tech, but we were using AMD virtual hosts (2300 series CPUs at the time) that did not understand how to use the SSE optimizations, so the application went into software rendering mode and pretty much bombed. Only by upgrading to AMD 6128 CPUs (and later E5s) were we able to fully use the compiler’s optimizations.

Using a platform-dependent compiler is very painful for the end users/customers. I wish more people would go open platform.

And I really hope that isn’t the case here for GW2. If it is, it’s just kittened.

Laptop: M6600 – 2720QM, AMD HD6970M, 32GB 1600CL9 RAM, Arc100 480GB SSD

There are a lot of issues with the game client.

1. It’s 32-bit, and can only use 2.8GB to 3.1GB of RAM before it crashes (in most cases, from what I’ve seen).

Not entirely sure on how true this is. I’ve played for at least 5 hours one day at highest settings at 5040×900 resolution (Eyefinity) and did a SB event about 4 hours in, and didn’t crash. I’ve never had a memory-related crash in the past either. It could be possible I just never hit that kind of memory usage, but if that’s true, I fail to see how anyone would reach such usage, unless you take part in large-scale WvW battles for a few hours maybe with no rest…

There are more, but those are the biggest issues IMHO. I think it’s time Anet gave us a new client to test with: use an open-platform compiler, make the code 64-bit, and detect all the cores and actively thread across them.

I do agree with this though. However, it seems GW2 currently uses Visual Studio’s built-in compiler (I could be wrong, but I checked with multiple programs and Linux), which is pretty unbiased from my understanding. The worst-case scenario would be GW2 using Intel’s compiler… in which case I’d imagine AMD users having half the performance they do now. Not sure what other choices for a compiler are available, or how viable they are.

There are a lot of issues with the game client.

1. It’s 32-bit, and can only use 2.8GB to 3.1GB of RAM before it crashes (in most cases, from what I’ve seen).

Not entirely sure on how true this is. I’ve played for at least 5 hours one day at highest settings at 5040×900 resolution (Eyefinity) and did a SB event about 4 hours in, and didn’t crash. I’ve never had a memory-related crash in the past either. It could be possible I just never hit that kind of memory usage, but if that’s true, I fail to see how anyone would reach such usage, unless you take part in large-scale WvW battles for a few hours maybe with no rest…

There are more, but those are the biggest issues IMHO. I think it’s time Anet gave us a new client to test with: use an open-platform compiler, make the code 64-bit, and detect all the cores and actively thread across them.

I do agree with this though. However, it seems GW2 currently uses Visual Studio’s built-in compiler (I could be wrong, but I checked with multiple programs and Linux), which is pretty unbiased from my understanding. The worst-case scenario would be GW2 using Intel’s compiler… in which case I’d imagine AMD users having half the performance they do now. Not sure what other choices for a compiler are available, or how viable they are.

In Windows, open Performance Monitor (Task Manager > Performance tab), go to Memory, look for GW2.exe and watch it while you play. I am curious what your max memory usage is for the process; I seem to crash mostly at SB/WvW and Frozen Maw when there are 50+ players around and GW2.exe reaches 2.7GB of RAM used.

As for the compiler, it completely depends on the code used to build the application.

Laptop: M6600 – 2720QM, AMD HD6970M, 32GB 1600CL9 RAM, Arc100 480GB SSD

We don’t know what compiler they use. IMO Microsoft’s is fine. Intel’s is fine too, as long as the game isn’t using the “optimized” Math Kernel Library or Performance Primitives.

RIP City of Heroes

ANet designed GW2 to run on hardware from 5 years ago, except it doesn’t.

Now we’re stuck with a game that lacks DX11 support, 64-bit optimization, multi-core optimization, and the ability to use more than 3.5 GB of memory.

It’s quite sad really for a PC-only title. Even cross-platform games are seeing more optimization than that.

Optimization? What optimization?

I’ve tried on both AMD and an Intel i7. I was rather disappointed, as I thought the i7 would be a huge increase in performance... it wasn’t. I’ve accepted the game has serious issues that need to be addressed and have stopped trying to make something work better that never will.

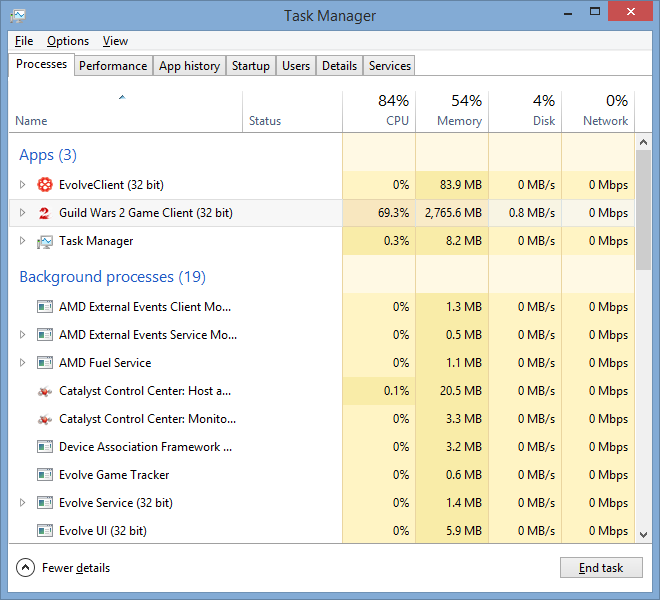

In Windows, open Performance Monitor (Task Manager > Performance tab), go to Memory, look for GW2.exe and watch it while you play. I am curious what your max memory usage is for the process; I seem to crash mostly at SB/WvW and Frozen Maw when there are 50+ players around and GW2.exe reaches 2.7GB of RAM used.

I attached a screenshot of GW2’s memory usage (exactly 2.7GB) during a SB event at nearly highest settings (only thing not highest is Best Texture Filtering and FXAA). No crash. Been playing for 2-3 hours prior to that event too.

Edit: Oops, Shadows wasn’t highest either it seems; not sure when I turned that down lol

(edited by Espionage.3685)

I’ve tried on both AMD and an Intel i7. I was rather disappointed, as I thought the i7 would be a huge increase in performance... it wasn’t. I’ve accepted the game has serious issues that need to be addressed and have stopped trying to make something work better that never will.

It definitely isn’t our hardware. If you are running an AMD FX 6 core or 8 core series CPU you should be fine. It is 100% lack of optimization.

I previously benched a few titles and posted them above with my rig (FX 8350 at 4.8 GHz, GTX 670 with boost disabled through BIOS, a forced 1228 MHz OC and +290 MHz on the RAM, Samsung 256 GB SSD, and 8 GB of 1866 MHz RAM) to give a measuring stick for what kind of performance high-end AMD users should be getting in GW2. From those benches I can prove that AMD hardware is not the issue here; the issue is the lack of proper CPU optimization. So basically the game’s frame rate drops and general inconsistency are 100% on ArenaNet in this case.

And for additional giggles I picked up FFXIV on Friday and cranked the settings all the way up to see if I could find another CPU-bottlenecked, inefficiently threaded MMO with performance issues, and was pleasantly surprised to find how well coded that game is. My FX 8350 loves it, and in six hours and a few heavily populated FATE events, the lowest I dropped to was 55 FPS, with an average of 110 FPS.

Like you just found out by making the jump, I cannot stress enough how little switching to an i5 or an i7 will help you in this game (though it may help you in others, so that has to be considered), so don’t waste your money at this point. Instead we should start an organized movement to pester and petition the devs to at least try to optimize this game for multi-threaded processors (or, at the least, release a 64-bit client; as I’ve stated before, if WoW can release one four years after launch, ArenaNet has zero excuses not to at least try), thereby addressing the biggest glaring issue in this otherwise fantastic game.

Pester them. Get on it.

(edited by yawa.5103)

Interesting post to read. I too am suffering currently, got an AMD Phenom 955 which I appreciate is getting old but all other games run fine at 60+ FPS, makes me a bit sad to think I’ll have to update my System for an MMO, I mean, to play a game like Crysis/Metro I can understand but an MMO?

Interesting post to read. I too am suffering currently, got an AMD Phenom 955 which I appreciate is getting old but all other games run fine at 60+ FPS, makes me a bit sad to think I’ll have to update my System for an MMO, I mean, to play a game like Crysis/Metro I can understand but an MMO?

The very nature of MMOs tends to make them demanding, same with RTS games.

EGVA SuperNOVA B2 750W | 16 GB DDR3 1600 | Acer XG270HU | Win 10×64

MX Brown Quickfire XT | Commander Shaussman [AGNY]- Fort Aspenwood

Interesting post to read. I too am suffering currently, got an AMD Phenom 955 which I appreciate is getting old but all other games run fine at 60+ FPS, makes me a bit sad to think I’ll have to update my System for an MMO, I mean, to play a game like Crysis/Metro I can understand but an MMO?

The very nature of MMOs tends to make them demanding, same with RTS games.

I understand that but I’ve tried almost every mainstream MMO released in the last 10 years and none of them cause me any issues such as this one!

But hey, not to say it’s all Anet’s fault. I am aware I need a PC update, just … need moneys

Interesting post to read. I too am suffering currently, got an AMD Phenom 955 which I appreciate is getting old but all other games run fine at 60+ FPS, makes me a bit sad to think I’ll have to update my System for an MMO, I mean, to play a game like Crysis/Metro I can understand but an MMO?

The very nature of MMOs tends to make them demanding, same with RTS games.

I’ve never played a single RTS game that was demanding on the CPU. Rise Of Legends, C&C, Supreme Commander other games etc.

I’ve tried on both AMD and an Intel i7. I was rather disappointed, as I thought the i7 would be a huge increase in performance... it wasn’t. I’ve accepted the game has serious issues that need to be addressed and have stopped trying to make something work better that never will.

It definitely isn’t our hardware. If you are running an AMD FX 6 core or 8 core series CPU you should be fine. It is 100% lack of optimization.

I previously benched a few titles and posted them above with my rig (FX 8350 at 4.8 GHz, GTX 670 with boost disabled through BIOS, a forced 1228 MHz OC and +290 MHz on the RAM, Samsung 256 GB SSD, and 8 GB of 1866 MHz RAM) to give a measuring stick for what kind of performance high-end AMD users should be getting in GW2. From those benches I can prove that AMD hardware is not the issue here; the issue is the lack of proper CPU optimization. So basically the game’s frame rate drops and general inconsistency are 100% on ArenaNet in this case.

And for additional giggles I picked up FFXIV on Friday and cranked the settings all the way up to see if I could find another CPU-bottlenecked, inefficiently threaded MMO with performance issues, and was pleasantly surprised to find how well coded that game is. My FX 8350 loves it, and in six hours and a few heavily populated FATE events, the lowest I dropped to was 55 FPS, with an average of 110 FPS.

Like you just found out by making the jump, I cannot stress enough how little switching to an i5 or an i7 will help you in this game (though it may help you in others, so that has to be considered), so don’t waste your money at this point. Instead we should start an organized movement to pester and petition the devs to at least try to optimize this game for multi-threaded processors (or, at the least, release a 64-bit client; as I’ve stated before, if WoW can release one four years after launch, ArenaNet has zero excuses not to at least try), thereby addressing the biggest glaring issue in this otherwise fantastic game.

Pester them. Get on it.

This guy is absolutely right. +1

Windows 10

Interesting post to read. I too am suffering currently, got an AMD Phenom 955 which I appreciate is getting old but all other games run fine at 60+ FPS, makes me a bit sad to think I’ll have to update my System for an MMO, I mean, to play a game like Crysis/Metro I can understand but an MMO?

The very nature of MMOs tends to make them demanding, same with RTS games.

I’ve never played a single RTS game that was demanding on the CPU. Rise Of Legends, C&C, Supreme Commander other games etc.

Guess what. Developers cripple their own game by enforcing population limits.

And for additional giggles I picked up FFXIV on Friday and cranked the settings all the way up to see if I could find another CPU-bottlenecked, inefficiently threaded MMO with performance issues, and was pleasantly surprised to find how well coded that game is. My FX 8350 loves it, and in six hours and a few heavily populated FATE events, the lowest I dropped to was 55 FPS, with an average of 110 FPS.

Hmmm, FFXIV, a game with a 1.5 global skill cast and a global server condition tick which happens every second for all conditions

If Anet were to optimize it like FFXIV, they would end up changing some of the core mechanics of the game, such as global condition tracking.

FFXIV made a lot of sacrifices. If you can’t see that, you are pretty blind.

Interesting post to read. I too am suffering currently, got an AMD Phenom 955 which I appreciate is getting old but all other games run fine at 60+ FPS, makes me a bit sad to think I’ll have to update my System for an MMO, I mean, to play a game like Crysis/Metro I can understand but an MMO?

The very nature of MMOs tends to make them demanding, same with RTS games.

I’ve never played a single RTS game that was demanding on the CPU. Rise Of Legends, C&C, Supreme Commander other games etc.

Guess what. Developers cripple their own game by enforcing population limits.

Or to make it more challenging… Games recycle all of the unit looks and graphics. Why? Because individual graphics and looks is what hits the CPU in those kinds of games. A game like Supreme Commander has a max cap of 1000 units and I’ve hit that cap without any sort of hit on the CPU. On my machine I’d probably be able to make 6,000 before I start seeing any hit but no one is ever going to do that.

Population caps in RTS games are to make it more challenging. Like Rise Of Legend’s 300 max cap and Star Wars Empire At War’s cap (which increases with each upgraded space station in every planet/system) RTS game engines are not that crappy that they need a population cap to hide any bad performance. Either your computer sucks… or your computer sucks. In my experience, RTS games have been the most smooth playing genre of videogame. With or without population caps.

Or to make it more challenging… Games recycle all of the unit looks and graphics. Why? Because individual graphics and looks is what hits the CPU in those kinds of games. A game like Supreme Commander has a max cap of 1000 units and I’ve hit that cap without any sort of hit on the CPU. On my machine I’d probably be able to make 6,000 before I start seeing any hit but no one is ever going to do that.

Population caps in RTS games are to make it more challenging. Like Rise Of Legend’s 300 max cap and Star Wars Empire At War’s cap (which increases with each upgraded space station in every planet/system) RTS game engines are not that crappy that they need a population cap to hide any bad performance. Either your computer sucks… or your computer sucks. In my experience, RTS games have been the most smooth playing genre of videogame. With or without population caps.

So you admit there is a population cap…..

Ask yourself this, is there an option to remove the population cap.

Please note that special units may take 2 or more unit supply in some games which make the population less than 1000 in the game

On my machine I’d probably be able to make 6,000 before I start seeing any hit but no one is ever going to do that.

Tracking units is an O(n^2) problem. The problem gets quadratically worse as n gets bigger.

1000^2 = 1,000,000

vs

6000^2 = 36,000,000

(6000^2 – 1000^2) / 1000^2 = 35

So you made the load 36 times what it was (an increase of 35x) by having 6 times as many units.
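The same arithmetic as a small Python check. It assumes, as the post does, that naive tracking compares every unit against every other unit; whether any particular engine really works that way is not something this thread establishes.

```python
def ordered_pairs(n: int) -> int:
    """n^2: every unit checked against every unit, the figure used above."""
    return n * n


def unordered_pairs(n: int) -> int:
    """n(n-1)/2: each distinct pair of units checked exactly once."""
    return n * (n - 1) // 2


if __name__ == "__main__":
    small, large = 1000, 6000
    print("units ratio:", large // small)
    print("n^2 ratio:", ordered_pairs(large) / ordered_pairs(small))
    print("pair ratio:", unordered_pairs(large) / unordered_pairs(small))
```

Counting each pair only once halves the absolute work, but the growth is still quadratic, so 6 times the units still costs roughly 36 times the checks.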

(edited by loseridoit.2756)

Look up SC2ALLin1.

It’s a mod/crack for StarCraft 2 so you can play it offline. It also happens to let you set up skirmish games with any population limit you like.

Go test it lol.

There are cracks or cheats for almost any RTS game out there, and if removing the population limit is something even 1 person may want, there’s a crack/cheat for it out there. Remember... "THIS IS THE INTERNET!"

EVGA GTX 780 Classified w/ EK block | XSPC D5 Photon 270 Res/Pump | NexXxos Monsta 240 Rad

CM Storm Stryker case | Seasonic 1000W PSU | Asux Xonar D2X & Logitech Z5500 Sound system |

There are cracks or cheats for almost any RTS game out there, and if removing the population limit is something even 1 person may want, there’s a crack/cheat for it out there. Remember... "THIS IS THE INTERNET!"

Yep, I love mods. The freedom to do everything. I hope that one day Blizzard will post the AI API. Those AI competitions are interesting.

Or to make it more challenging… Games recycle all of the unit looks and graphics. Why? Because individual graphics and looks is what hits the CPU in those kinds of games. A game like Supreme Commander has a max cap of 1000 units and I’ve hit that cap without any sort of hit on the CPU. On my machine I’d probably be able to make 6,000 before I start seeing any hit but no one is ever going to do that.

Population caps in RTS games are to make it more challenging. Like Rise Of Legend’s 300 max cap and Star Wars Empire At War’s cap (which increases with each upgraded space station in every planet/system) RTS game engines are not that crappy that they need a population cap to hide any bad performance. Either your computer sucks… or your computer sucks. In my experience, RTS games have been the most smooth playing genre of videogame. With or without population caps.

So you admit there is a population cap…..

Ask yourself this, is there an option to remove the population cap.

Please note that special units may take 2 or more unit supply in some games which make the population less than 1000 in the game

On my machine I’d probably be able to make 6,000 before I start seeing any hit but no one is ever going to do that.

Tracking units is an O(n^2) problem. The problem gets quadratically worse as n gets bigger.

1000^2 = 1,000,000

vs

6000^2 = 36,000,000

(6000^2 – 1000^2) / 1000^2 = 35

So you made the load 36 times what it was (an increase of 35x) by having 6 times as many units.

Population caps or not, it still doesn’t change that RTS games are the best performing games on the market. From what I’ve seen anyway.

Population caps or not, it still doesn’t change that RTS games are the best performing games on the market. From what I’ve seen anyway.

And did they solve the tracking problem? I would like to know, because perhaps they can share their wisdom on how they solved it. Changing the tracking problem from n^2 to n log n deserves a lot of attention. I want to read the paper. I mean it, really. Tracking is a complex problem.

From the looks of it, they reduced the population size, made textures near garbage, and stuck to a 1 vs 1 format. Pathing is always an issue. I can go on and on.

I rarely like to compare games in different genres, but just because your latest RTS runs well doesn’t mean another genre can apply the same optimization.

I usually judge games based on the design and the sacrifices they made. It’s pretty obvious if you know what to look for.

(edited by loseridoit.2756)

Kitten, I was hoping to find news of potential upcoming optimization in here.

I’ve got a Phenom II 965 running at 4.4 GHz and terrible FPS.

People keep telling me to get an FX processor, but they have even weaker single-core performance than Phenoms do, and this game doesn’t take advantage of multi-threading. If I did, I’d be spending $300 on a processor that, when overclocked to its max, would give me maybe a 5 FPS increase, keeping me still below the 30 FPS I need.

Not worth it.

I have both AMD and Intel CPUs: an FX 6300 and an i7 3770. Both are great CPUs.

So I am not a fan of either Intel or AMD.

I recommend an i5/i7 or an FX6300/FX8320; they are all good CPUs.

Intel is expensive and really good. AMD is cheap and has more cores.

I notice that AMD is trying to give the best performance. Many benchmarks mostly cripple AMD CPUs…

I know you have heard of AMD Mantle. I can’t wait. I can only hope that GW2 will support it. Actually, right now I would rather support AMD, because this company is trying to give us better performance. It would feel good to see all 4/8 cores (i7) at 100% while running the game…

Sorry for my “ENGLISH”

(edited by XFlyingBeeX.2836)

I’ve got a Phenom II 965 running at 4.4 GHz and terrible FPS.

Define terrible and in what kind of situations. I have a Phenom II X4 @ 3.4Ghz (unlocked X3), and I get 40-60+ in most PvE situations excluding group events which can drop down to 20-ish I believe, maybe a bit lower, but in any case, I find that pretty reasonable.