So, here is what I have done.

My system:

Core i5-4690K at 4.4 GHz, 1.1 V, cache at 4.4 GHz

Kingston RAM, 8 GB, 2133 MHz, 10-11-10-24, 1.68 V

Radeon R9 290 at 1090/1625, +50 mV, +10% power limit (Catalyst 14.7 Beta)

WD 1 TB, 7200 RPM, fully defragmented and optimized

Gigabyte Z97-HD3

And an 850 W Chieftec PSU

This runs GW2 at 30-40 fps with everything on low at Full HD (shadows off) at the EOTM start waypoint, and at 80 fps in Wayfarer. When someone appears on the screen, fps drops to 30. When I stand at one end of the map and look toward the other, I get 20 fps.

I am using a DP-to-VGA adapter to connect my VGA monitor to the DisplayPort output of the Radeon.

Now the funniest part. I switch on the integrated HD 4600 graphics with Turbo Boost off, connect my monitor VGA-to-VGA directly to the motherboard, and start playing GW2 on the same low settings. No fps drops. Always 70 fps in Wayfarer and 70 in EOTM, and that is the performance of the HD 4600.

GPU load on the R9: 100% (screenshot below). Temperature: 75°C (in other games I only see that with Vsync on; the normal temperature at full load with 90% fan speed is 88°C). But there are no core frequency reductions and no power saving. The memory clock sometimes drops to 150 MHz and then goes back up.

GPU load on the HD 4600: full load, full clocks.

When the character window is open, fps goes to exactly 30, no less and no more. This happens only on the R9.

And that was before the Feature Pack. I bought the new system a month ago, installed GW2, and got laaag.

P.S. Average FPS in other games, all on full Ultra: Battlefield 3, 110 FPS; The Secret World, 85 FPS; Borderlands 2, 140 FPS; Deus Ex, 150 FPS; World in Conflict, 120 FPS; Black Ops 2, 90 FPS; Wargame, 100 FPS.

And 55°C on the CPU cores. Smooth, yeah.

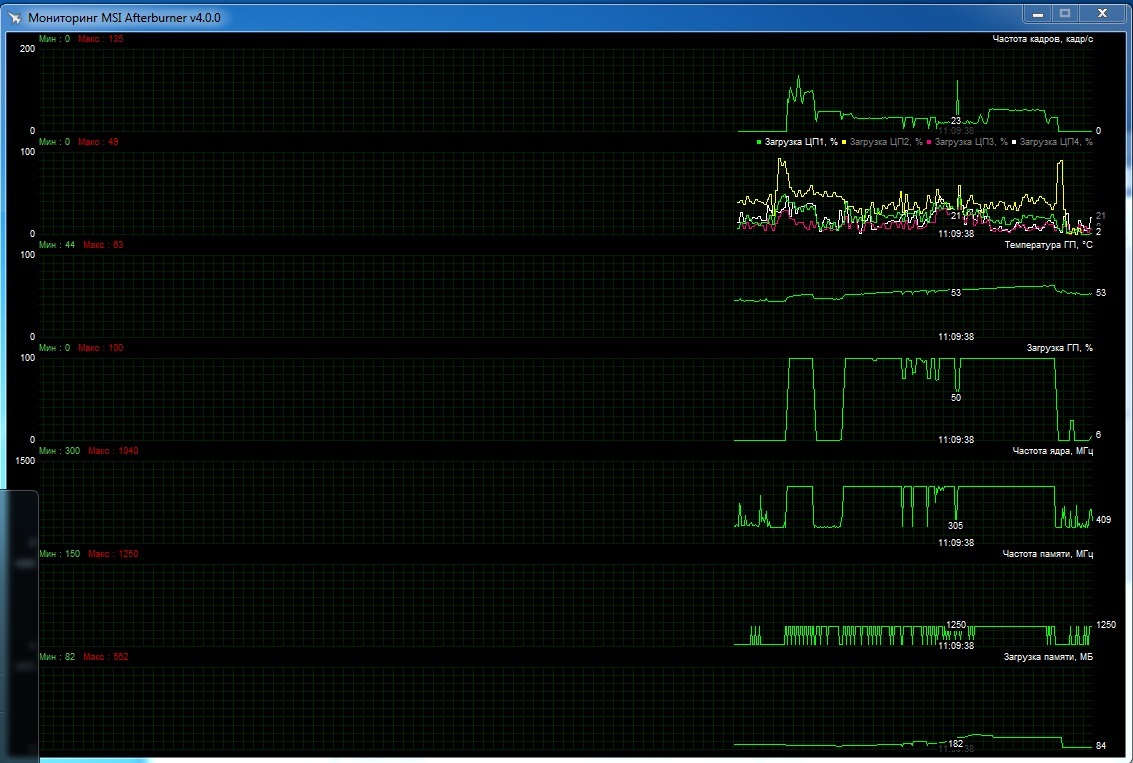

First column: FPS. Next: CPU load, GPU temperature, GPU load, GPU core and memory clocks, GPU memory load (EOTM, 3 minutes at the start).
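
If anyone wants to check the same readings from a log file instead of a screenshot, here is a minimal Python sketch. It assumes the monitoring tool can export comma-separated rows in the column order described above; that export format, the column names, and the sample values are all my assumptions for illustration, not real measurements from this system. It reports min/avg/max FPS and counts samples where the core clock fell well below its peak.

```python
from statistics import mean

# Hypothetical column order, following the description of the screenshot.
COLUMNS = ["fps", "cpu_load", "gpu_temp", "gpu_load",
           "core_clock", "mem_clock", "mem_load"]


def parse_log(text):
    """Parse comma-separated rows into a list of dicts keyed by COLUMNS."""
    rows = []
    for line in text.strip().splitlines():
        values = [float(v) for v in line.split(",")]
        rows.append(dict(zip(COLUMNS, values)))
    return rows


def summarize(rows, clock_floor=0.9):
    """Return FPS stats and the number of samples with a reduced core clock.

    A sample counts as reduced when its core clock is below
    clock_floor * (highest core clock seen in the log).
    """
    fps = [r["fps"] for r in rows]
    peak_core = max(r["core_clock"] for r in rows)
    reduced = sum(1 for r in rows if r["core_clock"] < clock_floor * peak_core)
    return {
        "min_fps": min(fps),
        "avg_fps": mean(fps),
        "max_fps": max(fps),
        "reduced_clock_samples": reduced,
    }


# Made-up placeholder rows, only to show the expected shape of the input.
SAMPLE = """
35,60,75,100,1090,1625,40
30,62,75,100,1090,150,40
40,58,74,100,1090,1625,41
"""

if __name__ == "__main__":
    print(summarize(parse_log(SAMPLE)))
```

If `reduced_clock_samples` stays at 0 while FPS is low, that would match what I see: the card is not throttling its core, yet the game still runs slowly.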