FOV (Field of View) Changes Beta Test - Feedback Thread

in Account & Technical Support

Posted by: VirtualBS.3165

Thanks for letting us zoom out farther; it’s still valuable, although, as many have said, it’s not really a complete fix.

First of all, please make GW2 a HOR+ game! Everyone here should understand the concept of HOR+ FoV scaling, so that we all know the technical term for what we are actually asking for:

http://en.wikipedia.org/wiki/Field_of_view_in_video_games

http://www.wsgf.org/category/screen-change/hor

Again, please make GW2 a HOR+ FoV scaling game!!!
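
For anyone unfamiliar with the term: under Hor+ scaling the vertical FoV stays fixed and the horizontal FoV grows with the aspect ratio, so a wider screen shows more to the sides instead of cropping the top and bottom. The relationship is tan(hFoV/2) = aspect × tan(vFoV/2). A minimal Python sketch, using a hypothetical fixed vertical FoV of 60 degrees (an illustrative value, not GW2's actual setting):

```python
import math


def hor_plus_hfov(vfov_deg: float, width: int, height: int) -> float:
    """Horizontal FoV in degrees under Hor+ scaling (vertical FoV held fixed)."""
    aspect = width / height
    half_vfov = math.radians(vfov_deg) / 2
    # tan(hfov / 2) = aspect * tan(vfov / 2)
    return math.degrees(2 * math.atan(aspect * math.tan(half_vfov)))


# Hypothetical fixed vertical FoV, for illustration only.
VFOV_DEG = 60.0

for width, height in [(1280, 1024), (1920, 1080), (5760, 1080)]:
    hfov = hor_plus_hfov(VFOV_DEG, width, height)
    print(f"{width}x{height}: {hfov:.1f} degrees horizontal")
```

Under Vert- scaling it is the other way around: the horizontal FoV is fixed, so widescreen and multi-monitor players lose vertical view instead of gaining horizontal view.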

New nVidia driver - 310.33 Beta (w/ AO fix)

The new 310.33 beta driver was released yesterday; it seems nVidia has finally fixed the ambient occlusion bugs.

There are also some performance improvements for Kepler cards: http://www.geforce.com/whats-new/articles/nvidia-geforce-310-33-beta-drivers-released

Win Vista, 7 and 8 (64-bit): http://www.geforce.com/drivers/results/50294

Win Vista, 7 and 8 (32-bit): http://www.geforce.com/drivers/results/50291

Will test when I get home.

Lucid Virtu MVP and GW2? (HD3000 vs HD4000)?

You have to re-install Virtu every time you update the nVidia drivers for i-mode to work properly again.

New nVidia drivers released today (10th October 2012)!

Windows (Vista, 7, 8) 32-bit – http://www.geforce.com/drivers/results/49892

Windows (Vista, 7, 8) 64-bit – http://www.geforce.com/drivers/results/49949

DriverVer = 02/10/2012, 9.18.13.0697

R306.97 (branch: r306_41-13)

Release notes: http://us.download.nvidia.com/Windows/306.97/306.97-win8-win7-winvista-desktop-release-notes.pdf
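
The DriverVer entry and the release number describe the same driver. A commonly cited rule of thumb for nVidia's Windows drivers (a convention, not anything nVidia documents as an API) is to take the last digit of the third field plus the whole fourth field: 9.18.13.0697 gives 3 and 0697, i.e. 306.97. A minimal Python sketch of that mapping:

```python
def nvidia_release(driver_ver: str) -> str:
    """Convert a DriverVer string such as '02/10/2012, 9.18.13.0697' to '306.97'.

    Assumes the rule of thumb described above: last digit of the third
    field + the four digits of the fourth field, dot before the last two.
    """
    version = driver_ver.split(",")[-1].strip()  # drop the date, if present
    fields = version.split(".")
    if len(fields) != 4:
        raise ValueError(f"expected four dot-separated fields: {version!r}")
    digits = fields[2][-1] + fields[3].zfill(4)
    return f"{digits[:3]}.{digits[3:]}"


print(nvidia_release("02/10/2012, 9.18.13.0697"))  # 306.97
```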

These are the games that currently use PhysX:

http://physxinfo.com/

(you can click the blue i icon next to each one to see more info and a YouTube video of the corresponding PhysX effects)

It’s actually a Language Bar shortcut for cycling through the installed keyboard layouts. Unless you removed it yourself, the US keyboard layout always comes pre-installed alongside your chosen keyboard layout. You have to remove it from “Text Services and Input Languages” (Control Panel → Change keyboards or other input methods → General tab → Installed services). Once you remove it, the shortcut will no longer do anything.

The most impact for GW2 will be the MB+CPU+RAM upgrade first.

Intel stock cooler good enough to cool system for GW2?

What is overclocking?

Simply put, overclocking refers to running a system component at higher clock speeds than are specified by the manufacturer. At first blush, the possibility of overclocking seems counter-intuitive—if a given chip were capable of running at higher speeds, wouldn’t the manufacturer sell it as a higher speed grade and reap additional revenue? The answer is a simple one, but it depends on a basic understanding of how chips are fabricated and sorted.

Chip fabrication produces large wafers containing hundreds if not thousands of individual chips. These wafers are sliced to separate individual dies, which are then tested to determine which of the manufacturer’s offered speed grades they can reach. Some chips are capable of higher speeds than others, and they’re sorted accordingly. This process is referred to as binning.

Chipmakers often find themselves in a position where the vast majority of the chips they produce are capable of running at higher clock speeds, since all chips of a particular vintage are produced in the same basic way. So chipmakers end up designating faster chips as lower speed grades in order to satisfy market demand. This practice is of particular interest to overclockers because it results in inexpensive chips with “free” overclocking headroom that’s easy to exploit. That’s the magic of binning: it’s often quite generous. A great many of the CPUs sold these days, especially the low-end and mid-range models, come with some built-in headroom.

Overclocking can do much more than exploit a chip’s inherent headroom, though. It’s also possible to push chips far beyond speeds offered by even the most expensive retail products. Such overclocking endeavors usually require more extreme measures, such as extravagant cooling solutions, so they’re a little beyond the scope of what most folks will want to tackle.

The above applies to AMD CPUs. For Intel CPUs, to keep it short: you need a model whose name ends with the letter K, which means its multiplier is unlocked.
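
The reason the multiplier matters: on these Intel platforms the core clock is the base clock (BCLK) times the multiplier, and the BCLK also drives other buses, so it has very little headroom. A minimal sketch, assuming the 100 MHz BCLK used by Sandy Bridge and Ivy Bridge desktop platforms:

```python
import math


def core_clock_ghz(multiplier: int, bclk_mhz: float = 100.0) -> float:
    """Core clock in GHz: base clock (MHz) times multiplier."""
    return bclk_mhz * multiplier / 1000


def multiplier_for(target_ghz: float, bclk_mhz: float = 100.0) -> int:
    """Smallest whole multiplier that reaches target_ghz at the given BCLK."""
    # round() guards against float noise such as 4.2 * 1000 not being exactly 4200
    return math.ceil(round(target_ghz * 1000 / bclk_mhz, 6))


print(multiplier_for(4.5), core_clock_ghz(45))  # 45 4.5
```

A locked (non-K) chip caps that multiplier, which leaves little room to overclock.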

There are a lot of good beginner’s guides to overclocking out there. Here’s an example:

http://www.techradar.com/news/computing-components/processors/beginners-guide-to-overclocking-1040234

Most motherboards nowadays also offer some form of auto-overclocking. Although it doesn’t reach the results you can get manually, it’s still pretty decent.

What Alxa.6527 said.

Also, every 2 months Tom’s Hardware does a System Builder Marathon, with PCs built for the $500, $1000 and $2000 price points:

http://www.tomshardware.com/reviews/How-To,4/Tweaking-Tuning,17/

Does pressing Ctrl+Shift or (left) Alt+Shift fix it?

Does that mean you confirmed you didn’t have the US keyboard layout selected there?

Do you also have that bug when using a software keyboard?

Nope, no effect on CPU temperature. As for overclocking, using HPET can even be an advantage in certain scenarios, so no worries.

Open the Windows Control Panel and search for “Region and Language”. Make sure everything is set to “UK”. Also, under “Text Services and Input Languages”, make sure the UK keyboard is the only one installed and is the default (remove the US keyboard from there).

What ATI drivers are you using? Try this if you are on older drivers: http://support.amd.com/us/gpudownload/windows/Pages/radeonmob_win7-64.aspx

Any PC newer than 2005 will almost certainly have HPET, even if the BIOS doesn’t show an option to turn it on or off. It’s part of the ACPI spec.

Has it happened more than once?

Intel stock cooler good enough to cool system for GW2?

The stock cooler is designed to handle the CPU running at 100% load, so you should be fine. Aftermarket coolers are generally only useful for overclocking.

That being said, you can always replace it later, if you really want to.

Have you installed Windows 7 Service Pack 1?

What is being done about AMD CPU performance?

It would be really interesting to see someone with an FX-8150 report Windows 8 figures. I’ve seen some early reports of a 10% increase in certain games, thanks to the new Windows 8 CPU scheduler.

@Swordbreaker.2581, I wholeheartedly agree with that!

I’d suggest a Sandy Bridge CPU over an Ivy Bridge one if you want to overclock, so an i5-2500K would be nice, plus a Noctua NH-D14 heatsink and fan: the best air cooling you can get.

As for the GPU, I personally think you should get a 500 series card, maybe a 560 Ti or 570, and put some money away to buy a 770 or 780 when they are released sometime in late Q1/early Q2 next year.

I can vouch for the Noctua NH-D14; it really is a beast in every sense of the word! Just be sure to use low-profile RAM (I use some G.Skill Sniper), otherwise that cooler won’t fit (but being low helps a lot with VRM cooling).

What is being done about AMD CPU performance?

@Aza.2105, do:

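start "" /AFFINITY 55 /B /WAIT "D:\Guild Wars 2\Gw2.exe"

(yes, with the quotes: they are needed for paths that contain spaces. The empty "" right after start is needed because start treats the first quoted argument as the window title.)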
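
For anyone wondering where the 55 comes from: /AFFINITY takes a hexadecimal bitmask in which bit n allows logical CPU n. 55 hex is 01010101 in binary, i.e. CPUs 0, 2, 4 and 6. Assuming Windows numbers the two cores of each FX module consecutively (0-1, 2-3, ...), that is one core per module. A small Python sketch that builds such a mask (the helper names are made up for illustration):

```python
def affinity_mask(cpus: list[int]) -> str:
    """Hex mask for start /AFFINITY: bit n set means logical CPU n is allowed."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "X")


def one_core_per_module(modules: int, cores_per_module: int = 2) -> list[int]:
    """First core of each module, assuming module cores are numbered consecutively."""
    return [m * cores_per_module for m in range(modules)]


cpus = one_core_per_module(4)  # FX-8150: 4 modules -> [0, 2, 4, 6]
mask = affinity_mask(cpus)
print(f'start "" /AFFINITY {mask} /B /WAIT "D:\\Guild Wars 2\\Gw2.exe"')
```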

What is being done about AMD CPU performance?

Where do you run that command? Because I have no idea how to do it. lol

In a command prompt. But try the patches first; they should have the same result.

http://www.anandtech.com/show/5448/the-bulldozer-scheduling-patch-tested

AMD and Microsoft have been working on a patch to Windows 7 that improves scheduling behavior on Bulldozer. The result is two hotfixes that should both be installed on Bulldozer systems. Both hotfixes require Windows 7 SP1; they will refuse to install on a pre-SP1 installation. The first update simply tells Windows 7 to schedule all threads on empty modules first, then on shared cores.

The second hotfix increases Windows 7’s core parking latency if there are threads that need scheduling. There’s a performance penalty you pay to sleep/wake a module, so if there are threads waiting to be scheduled they’ll have a better chance to be scheduled on an unused module after this update.

What is being done about AMD CPU performance?

deltaconnected.4058 is absolutely correct. I really hope ANet improves the multithreaded workload, as that will benefit everyone.

About the affinity mask trick: two Windows 7 hotfixes released in January are supposed to alleviate that:

http://www.anandtech.com/show/5448/the-bulldozer-scheduling-patch-tested

Beyond that, it seems Windows 8 has fixed these issues for good, with almost a 10 fps increase in World of Warcraft:

http://www.tomshardware.com/reviews/fx-8150-zambezi-bulldozer-990fx,3043-23.html

How about this?

System Builder Marathon, August 2012: $1000 Enthusiast PC

http://www.tomshardware.com/reviews/build-a-pc-overclock-benchmark,3276.html

You can do Quad-SLI in two different configurations, each with different requirements:

- Using 2 graphics cards with 2 GPUs each (e.g., two GTX 690s). You only need a motherboard that provides at least x8 PCI-E (2.0 or 3.0) on two slots, because each card already connects its two GPUs over its own onboard PCI-E bus.

- Using 4 graphics cards with 1 GPU each (e.g., four GTX 680s). You need a motherboard with enough PCI-E lanes to provide at least x8 PCI-E (2.0 or 3.0) on four slots.

Since all GPUs share the same data, most Quad-SLI certified motherboards simply use PCI-E bridge chips (such as the PLX PEX 8747) to make up for the limited number of PCI-E lanes coming from the CPU.
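
The rule of thumb above (every card gets a slot running at x8 or better) can be expressed as a quick check. A minimal sketch; the slot widths in the example are hypothetical, not taken from any particular motherboard:

```python
def supports_quad_sli(slot_widths: list[int], gpus_per_card: int) -> bool:
    """True if enough slots run at x8 or wider to hold 4 GPUs' worth of cards.

    slot_widths: electrical lane count of each slot as the board configures it.
    gpus_per_card: 2 for dual-GPU cards (e.g. GTX 690), 1 otherwise.
    """
    if gpus_per_card not in (1, 2):
        raise ValueError("gpus_per_card must be 1 or 2")
    cards_needed = 4 // gpus_per_card
    wide_enough = sum(1 for width in slot_widths if width >= 8)
    return wide_enough >= cards_needed


print(supports_quad_sli([16, 16], gpus_per_card=2))       # True
print(supports_quad_sli([16, 8, 4, 4], gpus_per_card=1))  # False
```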

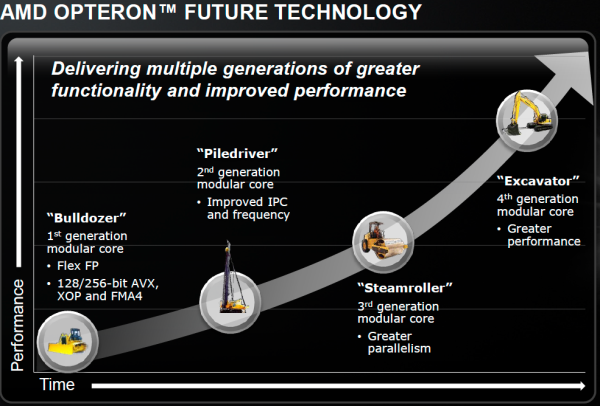

I’d say wait for Piledriver benchmarks before making any decision. From what I’ve seen, it shows a pretty good performance increase, since it addresses some of the most glaring drawbacks of the Bulldozer architecture.

limit FPS/refresh rate is limiting incorrectly?

Try using MSI Afterburner for limiting the FPS, it works really well.

What is being done about AMD CPU performance?

And, again, for the 4th? 4th time?? This topic is not about AMD vs Intel. This thread was over on day one, but you babies keep flocking back stating the obvious. How long has the FX series been out? Almost a year now? You’d think after almost a year the Intel fanboys would have gone home…

Here you go, I cropped the attachment this time because you clearly have eye problems.

Get your facts straight. You are refusing to see that the Phenom X6 and the FX-8150 are very different architectures. Past results are no guarantee of anything. The Bulldozer architecture has severe drawbacks in many areas, which may or may not show up depending on the CPU task, and they show up especially in desktop performance, because Bulldozer is heavily oriented towards server workloads.

Bulldozer weak spots:

- Shared Op Decoders

- Fewer integer execution units per core

- High L2 cache latency

- High Branch Misprediction Penalty

- Low L1 Instruction Cache Hit Rate

Desktop Performance Was Not the Priority

No matter how rough the current implementation of Bulldozer is, if you look a bit deeper, this is not the architecture that is made for high-IPC, branch intensive, lightly-threaded applications. Higher clock speeds and Turbo Core should have made Zambezi a decent chip for enthusiasts. The CPU was supposed to offer 20 to 30% higher clock speeds at roughly the same power consumption, but in the end it could only offer a 10% boost at slightly higher power consumption.

http://www.anandtech.com/show/5057/the-bulldozer-aftermath-delving-even-deeper/12

Actually, that’s not entirely correct. Under a 64-bit OS, a 32-bit application (with LAA enabled) can access up to 4GB of RAM, while a 64-bit application can access up to 8TB. Windows licensing restrictions (much as with the Server editions) cap the amount of RAM the OS supports far below 8TB, but the point is that a 64-bit application can access more than 4GB of RAM.

100% correct.
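
The numbers follow from address-space arithmetic. A 32-bit pointer can address 2^32 bytes (4 GiB), all of which a large-address-aware 32-bit process can use under a 64-bit OS, while 64-bit processes on Windows 7 get an 8 TB (2^43 byte) user-mode address space. A quick Python check:

```python
GIB = 2**30
TIB = 2**40


def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with a flat address of the given width."""
    return 2**address_bits


print(addressable_bytes(32) // GIB, "GiB")  # 4 GiB: 32-bit (LAA) limit
print(addressable_bytes(43) // TIB, "TiB")  # 8 TiB: Windows 7 x64 user-mode space
```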

It’s really all the same: 4 GPUs interconnected by a PCI-E bus. Each GTX 690 has two GPUs, connected by a PCI-E 3.0 bridge chip.

One 690 is usually around 95-100% of the performance of two 680s in SLI.

http://www.anandtech.com/show/5805/nvidia-geforce-gtx-690-review-ultra-expensive-ultra-rare-ultra-fast/19

The GTX 690 doesn’t quite reach the performance of the GTX 680 SLI, but it’s very, very close. Based on our benchmarks we’re looking at 95% of the performance of the GTX 680 SLI at 5760×1200 and 96% of the performance at 2560×1600. These are measurable differences, but only just. For all practical purposes the GTX 690 is a single card GTX 680 SLI – a single card GTX 680 SLI that consumes noticeably less power under load and is at least marginally quieter too.

What is being done about AMD CPU performance?

Moz,

That’s what I wrote in my post in this thread, but I don’t think many people bothered to read it. The fact is the FX-8150 is the same as the 1100T in single-threaded situations, and yeah, those CPU-dependent games prove that fact. But in GW2, the 1100T is 30% faster. When I noticed this, I wrote to AMD right away. When they responded with their last email stating that NCsoft and AMD are working together to improve performance on FX CPUs, I thought they were kidding me.

I’m glad Tom’s Hardware confirmed what they said, and I’m happy my voice was actually heard.

Many people say that the FX series are bad CPUs. This is only true when you compare them to Intel CPUs. But when you compare prices (motherboard included), AMD comes out a lot cheaper.

I sincerely hope they do get better performance on the FX’s. Intel is too comfortable at the moment in the high-end CPU business, and this is never good for any consumer. My first AMD CPU was a 386DX@40 MHz, and oh boy, did it rock!

Let’s hope GW2 doesn’t rely much on Bulldozer’s weak spots.

What is being done about AMD CPU performance?

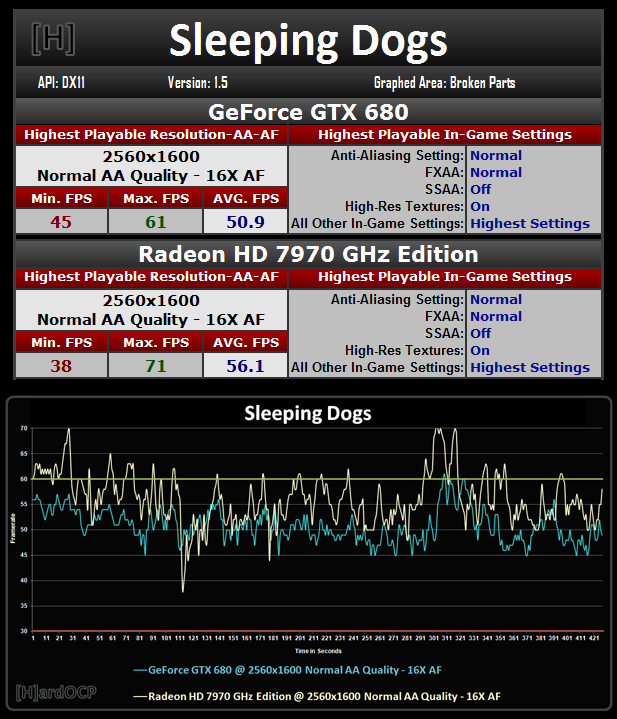

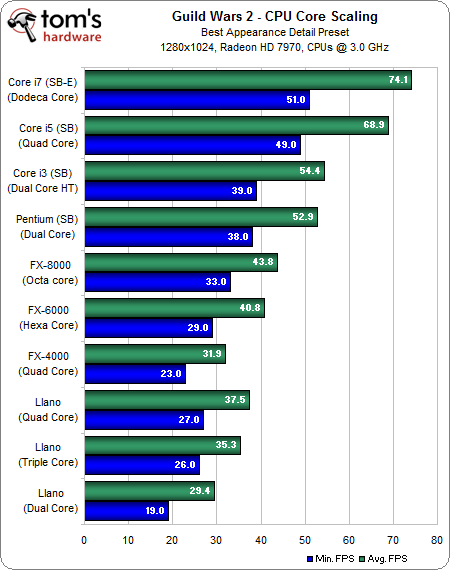

We’re focusing on minimum fps because, unlike many other SP/MP games, Guild Wars 2 and most MMOs in general are CPU bound rather than limited by the GPU. That’s why Tom’s Hardware’s reviewers turned the settings down and used high-end GPUs: to show the CPU’s performance, not the GPU’s. This is why we look at the minimum fps; it shows the most CPU-intensive parts of the game, i.e. ZERGS.

Yes, the minimum fps usually happens in the most intensive parts of the game. However, you cannot accurately interpret it in a benchmark, because it doesn’t tell you whether it’s an isolated case or happens a lot. It’s not a standard deviation. A bar chart can’t show how often a particular card dips to low fps; you need a performance curve over time.

A video card that dips once or twice to a low minimum fps is very different from one that consistently goes there. Look at the example benchmark screenshot (taken from HardOCP). Would you say the GTX 680 is the better card because it has a min fps of 45 versus the 7970’s 38? Of course not. The averages paint the better picture.
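
To make the point concrete, here is a sketch with two invented frame-rate traces (hypothetical numbers, not the HardOCP data): card A has the higher minimum but dips constantly, card B dips once to a lower minimum. The average, the standard deviation and the share of samples below a threshold show what the minimum alone hides:

```python
from statistics import mean, pstdev

# Hypothetical per-second fps samples, for illustration only.
card_a = [60, 46, 60, 45, 58, 46, 60, 45, 59, 46]  # higher minimum, frequent dips
card_b = [70, 72, 38, 71, 69, 73, 70, 72, 71, 70]  # one deep dip


def summarize(samples: list[int], threshold: int = 50) -> dict:
    """Minimum, mean, population std dev and fraction of samples below threshold."""
    below = sum(1 for fps in samples if fps < threshold)
    return {
        "min": min(samples),
        "avg": mean(samples),
        "stdev": round(pstdev(samples), 1),
        "share_below": below / len(samples),
    }


for name, trace in [("A", card_a), ("B", card_b)]:
    print(name, summarize(trace))
```

Card A "wins" on minimum fps (45 vs 38), yet card B is faster on average and spends far less time below 50 fps.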

A FX-8150 and a 1100T will go back and forth on synthetic benchmarks, but the point is they are synthetic and not real world gaming results. Look at World of Warcraft, Starcraft 2, and Civilization V which are CPU bound games; the FX-8150 performs similarly to a 1100T when it comes to CPU bound games. On the other hand the 1100T is performing significantly better (30% BETTER) than the FX-8150 when it comes to this game, Guild Wars 2.

True, the discrepancy is monumental. However, because of Bulldozer’s architectural differences, it’s still a gamble which performance improvements can be made to alleviate this.

Overclocking high-TDP CPUs is much more difficult than low-TDP ones? Wrong. The Black Edition CPUs are known for being unlocked and having a high TDP for amazing overclocks. I honestly don’t know why you replied to this, because you missed the point I was trying to make to that person. Let me give you an off-the-wall example: “I’m going to buy this super duper energy-efficient desktop computer! But my car is a 2004 Hummer H2!”

Don’t confuse high TDP with high current leakage.

The Tom’s Hardware reviews you linked are irrelevant to this conversation, since we were talking about Pentium dual cores and not i3s. On top of that, the games benchmarked are GPU bound, not CPU bound. I’m sure even old Athlons can maintain a 60 fps minimum in these SP/MP games.

If you find the links irrelevant, then I really don’t know what else to say. The first link compares the FX-4100@3.6GHz with an i3-2100@3.1GHz, which is very close to a Pentium G680. The second link is a $500 PC that uses a Pentium G680 in various benchmarks. You said “A Pentium Dual core might be a good choice over an FX CPU, if you want to pay $90 to play one certain game…”; this proves that a Pentium G680 can chug away nicely in many more games than just one…

Let’s compare old Athlons with an old Pentium G850 then… Hmm…

http://www.anandtech.com/bench/Product/121?vs=404

Here’s my attachment again; don’t let it go over your head too.

Lol. Hardly. I’ve probably used more AMD CPUs in my life than Intel ones. I just don’t think AMD is heading in the right direction at the moment. They are backing away from competing with Intel to go the ARM route. Let’s see how that turns out.

It is using all 6 cores, just one more heavily than the others. This can be related to a lot of different things, from game design to the network stack.

So how do you find out for sure what it is? Get a program called Process Explorer. It will show you, in much more detail, the programs/threads allocated to each CPU core.

What is being done about AMD CPU performance?

You keep mentioning “best case scenario” like it means anything.

Yes, sorry, the average fps. So tell me why I would care about fps above my monitor’s refresh rate, or care more about average fps than my min. fps. Excluding min. fps is the most ridiculous thing I’ve ever heard.

Do you even know what the average of a distribution means? Trading the average for the minimum means trading a measure of central tendency that uses every data point for a single data point. This is why I generally don’t like it when benchmark sites post minimum fps: if they posted the standard deviation instead, at least people would go read about what that means.

An underclocked FX-4000 going from 3.0GHz to its stock 4.0GHz gained 41-43% in performance, while the 2500K gained 4 min. fps. As for an FX-4000 slightly beating a G860 at stock clocks, take into account the FX-8150 doing 20-27% worse than an 1100T, but only in Guild Wars 2. Also take into account the mediocre overclocking the G860 is capable of.

An FX-8150 loses to an equally clocked 1100T. Here’s an FX-8150@3.6GHz vs 1100T@3.3GHz: http://www.anandtech.com/bench/Product/434?vs=203

If the G860 doesn’t need an overclock to get good results, why would there be a need to overclock it?

And yeah, TDP of a dual core vs a 4 core, I’ll take that into account when users use multi-monitors, multi-GPUs, drive gasoline cars, and accidentally leave their lights on.

Sorry, but no. Overclocking high TDP CPUs is much more difficult than low TDP ones. The FX-4100 is a 95W CPU — that’s the same as an i5-2500K. Inefficiency per Watt at its best.

A Pentium Dual core might be a good choice over an FX CPU, if you want to pay $90 to play one certain game…

Or maybe many other games also?

http://www.tomshardware.com/reviews/fx-4100-core-i3-2100-gaming-benchmark,3136.html

http://www.tomshardware.com/reviews/gaming-pc-overclocking-pc-building,3273.html

What is being done about AMD CPU performance?

Let’s get this straight.

1) Yes, Tom’s Hardware knows how to benchmark games. Min fps on a CPU bound chart is still a best-case scenario. As soon as you put a lesser GPU in that system (or increase the resolution), everything will decrease. Read my post above.

2) If you want to compare CPU prices, do it in an unbiased way:

- i5-2500K priced at ~$220: 68.9 fps (100%)

- Pentium G860 priced at $90: 52.9 fps (76.8%)

- FX-4000 priced at ~$105-$120: 31.9 fps (46.3%)

Sorry, but there is no possible reason for choosing any AMD processor for this game, at this moment. I really hope that can change in the future.
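
The percentages are each CPU's average fps divided by the i5-2500K's. Adding fps per dollar makes the price argument explicit. A sketch using the numbers listed above, with the FX-4000's price taken as the midpoint of the ~$105-$120 range (an assumption):

```python
def relative_percent(fps: float, baseline_fps: float) -> float:
    """Performance relative to a baseline, in percent."""
    return 100 * fps / baseline_fps


# (name, price in USD, average fps) from the list above.
cpus = [
    ("i5-2500K", 220.0, 68.9),
    ("Pentium G860", 90.0, 52.9),
    ("FX-4000", 112.5, 31.9),  # midpoint of ~$105-$120 (assumption)
]

baseline = cpus[0][2]
for name, price, fps in cpus:
    print(f"{name}: {relative_percent(fps, baseline):.1f}%, {fps / price:.3f} fps/$")
```

The same calculation gives the 79% figure for the FX-4000 at 4GHz (54.4 / 68.9).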

What does it being a best-case scenario have to do with anything anyway? No one is talking about GPU performance.

I wasn’t talking about GPU performance either. Those are CPU performance numbers.

And there’s a problem with your percentages: they only take into account the highest possible fps and exclude the most demanding parts (min. fps). There’s another problem with this chart: all CPUs are clocked at 3.0GHz, so it doesn’t show out-of-the-box performance or what overclocking potential would do. What this chart does show is clock-for-clock performance, which is irrelevant unless you’re dumb enough to downclock your CPU on purpose to play games.

Getting a little bit biased there, pal.

These are not based on the highest possible fps, but on average fps: a huge difference. There is no reason to compare minimum fps, other than to get an estimate of the variation of the measurement.

Let’s do another comparison then:

- i5-2500K@3GHz priced at ~$220: 68.9 fps (100%)

- Pentium G860@3GHz priced at $90: 52.9 fps (76.8%)

- FX-4000@4GHz priced at ~$105-$120: 54.4 fps (79%)

A 1GHz overclock yielded just 1.5 fps over the Pentium G860, in a best-case scenario… lol

Let’s not even compare the TDP numbers of the two architectures, if you have to pay for your energy.

A Q6600@3.4GHz with an HD 6870 doesn’t have any problems with this game at 1920×1080 on the highest settings, with shaders on Ultra, AA on and Vsync on. In massive WvWvW, fps drops to around 24-30 (average fps in PvE is 38-60); it’s not a slide show. Yes, it is old and may get cranky in events like the Inquest Raid during the beta (but the game wasn’t optimised then). I would say it can be compared to a dual-core i5 with HT.

Any HD 6850 can be overclocked to HD 6870 performance.

My last CPU was a Q6600@3.6GHz. I’m now at an i7-2600K@4.5GHz. I used a GTX 560Ti@1GHz on both.

I was playing Deus Ex: HR at the time I changed the CPUs. I can tell you that going to the i7 almost doubled my framerate, at the same graphic settings. I was really… “Wow!”. There is no way that a Q6600 can be compared to any of the current CPU architectures.

My only remaining recommendation would be to overclock as much as you can. It seems AMD CPUs benefit a lot from high clocks in this game.

@Rudens.3245: The i5-3210M is a dual-core CPU with Hyper-Threading (2 physical cores + 2 virtual cores), while the i7-2670QM is a quad-core CPU with Hyper-Threading (4 physical cores + 4 virtual cores). The GT 630M has 96 shaders, while the GT 650M has 384.

Tbh, both are bad choices, lol. The ideal would be that i7 with the 650M. If you really “have” to choose, I’d say the 650M is much better than the 630M (as in more than 2x better).

The i5 is also an Ivy Bridge CPU (that i7 is Sandy Bridge), which offsets some of the i7’s advantage.

Did you get any performance increase from disabling core parking?

Extremely bad game performance. Tech questions.

There’s no need to do anything special to uninstall the nVidia drivers; their installer has been pretty robust for some years now.

Just uninstall the ones you currently have, reboot if the uninstaller asks you to, and install the ones you want with “Custom install” and “clean install” selected. Also be sure to unselect the parts of the driver package you don’t use (if you don’t have a 3D monitor or TV connected to the card, you can unselect the 3D Vision components; if you don’t use HDMI audio, you can unselect that too). In my case, besides the driver of course, I only leave the nVidia updater and the PhysX package checked. Less bloat, fewer problems.

Before reverting to the 296.10, try the 306.63. Just install them on top of the 306.23, using the same procedure above. No need to uninstall first — like I said, their install routine is pretty robust at this point.

New Rig. Will I notice a difference in GW2?

@IPunchedyour family.7294, you can start by trying your motherboard’s auto-overclock features. That should give you a nice performance boost even if you don’t have the time to read through all the o/c material.

I have my i7-2600K@4.5GHz with every power-saving feature on, LLC at just one notch above minimum and -0.045 Vcore offset (yes, minus). In the end, it all just depends on the silicon you get.

What is being done about AMD CPU performance?

CPU benchmarks using game engines are made so as to answer the question “If you had a GPU with infinite performance, how far would your CPU be able to push the game?”. That’s why a 7970@1280x1024 is used, so that the GPU is not the bottleneck, the CPU is.
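
A first-order way to see why such results are a best case: if the CPU and GPU work on different frames in parallel, the slower of the two sets the pace, so the delivered frame rate is roughly the lower of what each could sustain alone. This ignores driver overhead and frame-to-frame variation, and the GPU figures below are hypothetical:

```python
def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Bottleneck model: the slower component sets the frame rate."""
    return min(cpu_fps, gpu_fps)


cpu_limit = 52.9  # example CPU-bound result from a low-resolution, fast-GPU test
for gpu_limit in (200.0, 60.0, 40.0):  # hypothetical GPU limits as settings rise
    fps = delivered_fps(cpu_limit, gpu_limit)
    print(f"GPU limit {gpu_limit:.0f} fps -> about {fps:.1f} fps")
```

With a fast GPU the CPU number shows through; once the GPU limit drops below it, the frame rate falls, which is why the CPU-bound figure is a ceiling rather than what you will see at higher settings.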

Please note Tom’s Hardware probably knows how to benchmark games and more than likely doesn’t stare at the sky or walls for their reviews; surely the min. fps represents the most CPU-intensive moments, i.e. zergs…

I also don’t know how many more times I have to post these; it’s like you Intel fanboys have blinders on for any relevant information regarding AMD CPUs.

New Rig. Will I notice a difference in GW2?

You can easily OC that CPU to 4.2GHz; remember to increase the multiplier, not the base clock. Disable C1E and SpeedStep. Leave CPU phase control at Optimised and leave it at T-Probe for thermal consistency. LLC (load-line calibration) should be Ultra High, and you can probably get away with around 1.24V Vcore. Leave the rest.

Chill on that LLC@max; it’s not really healthy unless absolutely necessary. Vdroop is a “feature”, not a “bug”.

Here’s a good starting point:

http://www.overclock.net/t/1198504/complete-overclocking-guide-sandy-bridge-ivy-bridge-asrock-edition
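
On why vdroop is deliberate: in the load-line model the voltage the CPU actually sees is roughly the set voltage minus load current times the load-line resistance, and higher LLC settings shrink that effective resistance. The droop keeps the voltage overshoot that occurs when the load suddenly drops within limits, which is why flattening it completely is not ideal. A sketch with hypothetical values (real resistances and currents depend on the board and CPU):

```python
def vcore_under_load(v_set: float, load_amps: float, loadline_mohm: float) -> float:
    """Approximate Vcore under load: V_set - I * R_loadline."""
    return v_set - load_amps * loadline_mohm / 1000


V_SET = 1.30      # volts set in the BIOS (hypothetical)
LOAD_AMPS = 80.0  # current drawn under full load (hypothetical)

for setting, r_mohm in [("low LLC", 1.5), ("medium LLC", 0.8), ("max LLC", 0.0)]:
    print(f"{setting}: {vcore_under_load(V_SET, LOAD_AMPS, r_mohm):.3f} V")
```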

Please test your system stability with the post I linked before. Then you can know for sure if it’s a hardware or software problem.

Other games working fine, unfortunately, is no assurance of anything.

Yeah, that’s my project for this weekend. Going to clean her out as well as reapply thermal compound. It’s almost 2 years old now, so it can’t hurt to redo the paste.

Make sure you apply it correctly!

Also, remember that most laptops don’t use thermal paste, but adhesive thermal stickers, so don’t remove those if you don’t see any screws holding the heatsinks.

What is being done about AMD CPU performance?

4100 is getting 41 min. fps @ 4GHz. It’s really not that bad when you OC. I wouldn’t worry about it.

Kalec.6589 is correct: those CPU benchmarks show a best-case scenario, meaning it’s only downhill from there.

What is being done about AMD CPU performance?

Like I said in my caveat, parts are already ordered. Though with the overclocking and the fans I have, would 4.5GHz be doable with the stock cooler? I do plan to upgrade to Bulldozer when I get a chance.

Stock coolers generally suck at overclocking; I wouldn’t expect you to get more than 4.0GHz, and even that may be a stretch. Really, if you still have the chance to change your order, do so.

Your CPU is already Bulldozer; Piledriver is coming now and Steamroller next year.
